Total 257 Questions

Last Updated On : 2-Jun-2025

Preparing with the Data-Architect practice test is essential to ensure success on the exam. This Salesforce SP25 test lets you familiarize yourself with the Data-Architect exam question format and identify your strengths and weaknesses. By practicing thoroughly, you can maximize your chances of passing the Salesforce certification Spring '25 release exam on your first attempt. Surveys from different platforms and user-reported pass rates suggest that Data-Architect practice exam users are ~30-40% more likely to pass.

Universal Containers has received complaints that customers are being called by multiple Sales Reps where the second Sales Rep that calls is unaware of the previous call by their coworker. What is a data quality problem that could cause this?

A.

Missing phone number on the Contact record.

B.

Customer phone number has changed on the Contact record.

C.

Duplicate Contact records exist in the system.

D.

Duplicate Activity records on a Contact.

Correct answer: C. Duplicate Contact records exist in the system.

Explanation:

Duplicate Contact records fragment customer data across multiple records. When Sales Rep A logs a call on one duplicate, Sales Rep B viewing a different duplicate won't see that activity. This breaks data visibility and causes redundant outreach. Option A (missing phone) or B (changed number) might prevent calls but does not explain why reps are unaware of their colleagues' calls. Option D (duplicate Activities) would create redundant logs on the same Contact but wouldn't hide activities from other reps. Only duplicate Contacts create isolated data silos: even when the sharing model grants every rep access, activities logged on one duplicate remain invisible to a rep working on another. Merging duplicates consolidates activities and prevents this issue.
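As an illustration only (plain Python, not Salesforce code), the sketch below groups hypothetical Contact records by a normalized email address and then merges each group. The matching key is an assumption; real Salesforce matching rules can compare other fields. It shows the point made above: before the merge each rep's call sits on a different record, and after it both calls live on one master record.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Contact:
    # Hypothetical, simplified Contact; real records carry many more fields.
    id: str
    name: str
    email: str
    activities: list = field(default_factory=list)


def normalize(email: str) -> str:
    # Assumed matching key: trimmed, case-insensitive email address.
    return email.strip().lower()


def find_duplicates(contacts):
    """Group contacts that share a matching key; keep only groups with 2+ members."""
    groups = defaultdict(list)
    for c in contacts:
        groups[normalize(c.email)].append(c)
    return [g for g in groups.values() if len(g) > 1]


def merge(group):
    """Keep the first record as master and move every activity onto it."""
    master, *losers = group
    for dup in losers:
        master.activities.extend(dup.activities)
        dup.activities = []
    return master


contacts = [
    Contact("003A", "Pat Lee", "pat@example.com", ["Call by Rep A"]),
    Contact("003B", "Pat Lee", " PAT@example.com", ["Call by Rep B"]),
    Contact("003C", "Sam Roe", "sam@example.com"),
]

dupes = find_duplicates(contacts)
# Before merging, Rep B viewing 003B sees only their own call.
master = merge(dupes[0])
print(master.id, master.activities)
```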

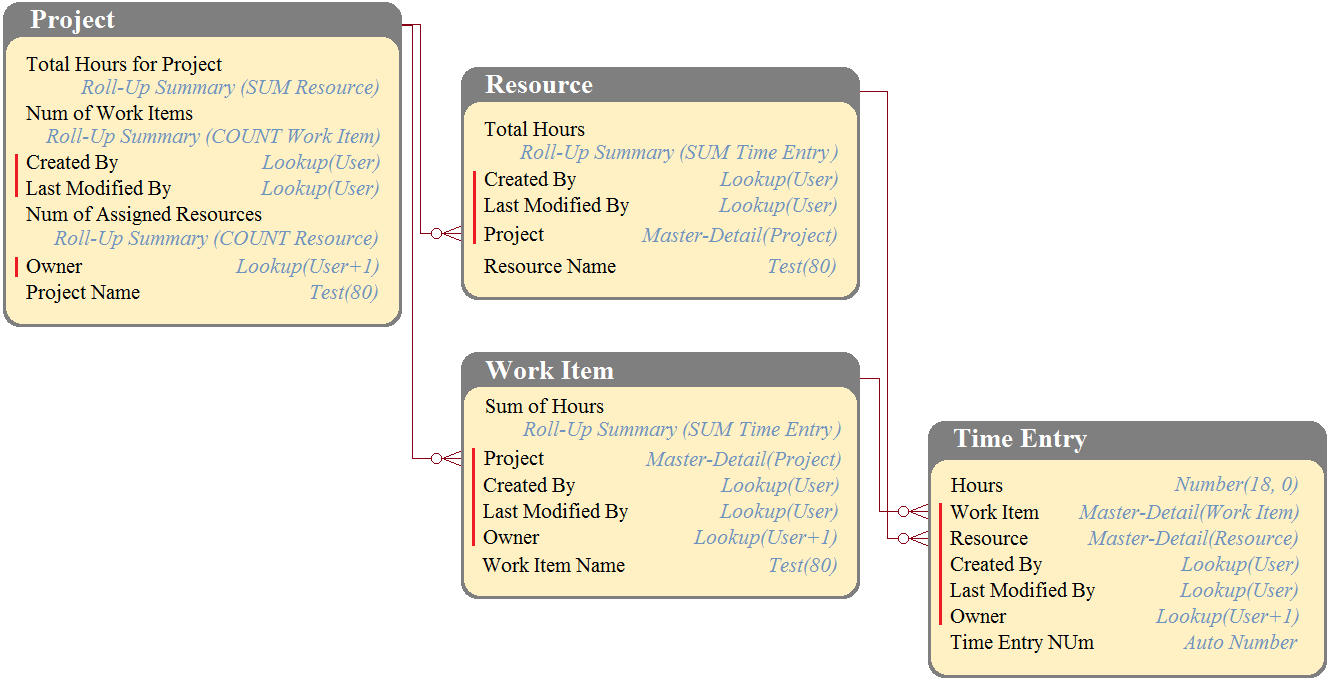

DreamHouse Realty has a data model as shown in the image. The Project object has a private sharing model, and it has Roll-Up summary fields to calculate the number of resources assigned to the project, total hours for the project, and the number of work items associated to the project. There will be a large amount of time entry records to be loaded regularly from an external system into Salesforce.

What should the Architect consider in this situation?

A.

Load all data after deferring sharing calculations.

B.

Calculate summary values instead of Roll-Up by using workflow.

C.

Calculate summary values instead of Roll-Up by using triggers.

D.

Load all data using external IDs to link to parent records.

Correct answer: A. Load all data after deferring sharing calculations.

Explanation:

When loading a large volume of records into an object with a private sharing model and roll-up summary fields, performance is critical. Deferring sharing calculations until the load finishes avoids recalculating sharing repeatedly during the load, which reduces processing time. This is particularly useful here, where each load also updates roll-up summary fields on the parent Project object.

Incorrect Options:

B & C. Workflow/Triggers for summary: These approaches are less scalable and harder to maintain than native Roll-Up fields, and they introduce unnecessary complexity.

D. Use external IDs: This helps link records efficiently but does not address the sharing model performance issue, which is the real concern here.

Universal Containers (UC) has built a custom application on Salesforce to help track shipments around the world. A majority of the shipping records are stored on-premises in an external data source. UC needs shipment details to be exposed to the custom application, and the data needs to be accessible in real time. The external data source is not OData enabled, and UC does not own a middleware tool. Which Salesforce Connect procedure should a data architect use to ensure UC's requirements are met?

A.

Write an Apex class that makes a REST callout to the external API.

B.

Develop a process that calls an invocable web service method.

C.

Migrate the data to Heroku and register Postgres as a data source.

D.

Write a custom adapter with the Apex Connector Framework.

Correct answer: D. Write a custom adapter with the Apex Connector Framework.

Explanation:

The Apex Connector Framework lets you build a custom adapter for Salesforce Connect, which exposes external data as external objects without copying it into Salesforce. This meets UC's real-time requirement even though the source is not OData enabled. Option A (REST callout) requires custom Apex but doesn't surface the data natively as objects. Option B is vague and impractical. Option C (Heroku) contradicts the requirement to keep the data in its external source, because it requires migrating it. A custom adapter (D) is written in Apex (classes extending DataSource.Connection and DataSource.Provider) and translates the external API's responses into external object rows at query time, so no OData endpoint is needed. Shipment data then appears as external objects in Salesforce, accessible via SOQL, reports, and page layouts, which solves real-time visibility without middleware.

Universal Containers (UC) has accumulated data over the years and has never deleted data from its Salesforce org. UC is now exceeding the storage allocations in the org and is looking for options to delete unused data. Which three recommendations should a data architect make in order to reduce the number of records in the org? Choose 3 answers

A.

Use hard delete in Bulk API to permanently delete records from Salesforce.

B.

Use hard delete in batch Apex to permanently delete records from Salesforce.

C.

Identify records in objects that have not been modified or used in the last 3 years.

D.

Use Rest API to permanently delete records from the Salesforce org.

E.

Archive the records in enterprise data warehouse (EDW) before deleting from Salesforce.

Correct answers:

A. Use hard delete in Bulk API to permanently delete records from Salesforce.

C. Identify records in objects that have not been modified or used in the last 3 years.

E. Archive the records in enterprise data warehouse (EDW) before deleting from Salesforce.

Explanation:

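A: A Bulk API hard delete bypasses the Recycle Bin and permanently removes records at scale (up to 10,000 records per batch), so the storage is freed without waiting for the Recycle Bin to be emptied. The running user needs the "Bulk API Hard Delete" permission.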

C: Identifying unused records (e.g., via SOQL LastModifiedDate) targets deletion efforts efficiently. Objects like Tasks, old Cases, or Logs are prime candidates.

E: Archiving to an EDW preserves the data for reporting or compliance needs before the irreversible deletion.

Excluded Options:

B: Batch Apex risks hitting governor limits (e.g., 10,000 DML rows per transaction) and is less efficient than Bulk API.

D: REST API has lower throughput than Bulk API for mass deletion.

Archiving (E) is critical: Salesforce storage is expensive, while cloud EDWs (e.g., Snowflake) offer cheaper long-term retention.
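The three recommended steps (C, then E, then A) can be sketched as a small pipeline. This is a hypothetical Python illustration under stated assumptions: records are plain dictionaries with `Id` and `LastModifiedDate`, the warehouse is an in-memory list, "3 years" is approximated as 3 × 365 days, and the actual Bulk API `hardDelete` job submission is not shown. The last function only splits the Ids into batches of at most 10,000, the Bulk API 1.0 per-batch limit.

```python
from datetime import datetime, timedelta, timezone

BATCH_SIZE = 10_000  # Bulk API 1.0 maximum records per batch


def find_stale(records, now, years=3):
    """Step C: records whose LastModifiedDate is older than the cutoff (leap days ignored)."""
    cutoff = now - timedelta(days=365 * years)
    return [r for r in records if r["LastModifiedDate"] < cutoff]


def archive(records, warehouse):
    """Step E: copy records to the (hypothetical) warehouse before anything is deleted."""
    warehouse.extend(dict(r) for r in records)


def delete_batches(records, batch_size=BATCH_SIZE):
    """Step A: split Ids into batches sized for a Bulk API hardDelete job (submission not shown)."""
    ids = [r["Id"] for r in records]
    return [ids[i:i + batch_size] for i in range(0, len(ids), batch_size)]


now = datetime(2025, 6, 2, tzinfo=timezone.utc)
records = [
    {"Id": f"500{i:05d}", "LastModifiedDate": now - timedelta(days=d)}
    for i, d in enumerate([10, 400, 1200, 2000, 3000])
]
warehouse = []
stale = find_stale(records, now)
archive(stale, warehouse)  # archive first, so deletion never loses data
batches = delete_batches(stale)
print(len(stale), len(warehouse), batches)
```

Ordering matters in this design: archiving runs before the batches are built, so nothing is queued for permanent deletion until a copy exists outside Salesforce.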

North Trail Outfitters (NTO) operates a majority of its business from a central Salesforce org. NTO also owns several secondary orgs that the service, finance, and marketing teams work out of. At the moment, there is no integration between the central and secondary orgs, leading to data-visibility issues. Moving forward, NTO has identified that a hub-and-spoke model is the proper architecture to manage its data, where the central org is the hub and the secondary orgs are the spokes. Which tool should a data architect use to orchestrate data between the hub org and spoke orgs?

A.

A middleware solution that extracts and distributes data across both the hub and spokes.

B.

Develop custom APIs to poll the hub org for change data and push into the spoke orgs.

C.

Develop custom APIs to poll the spoke for change data and push into the org.

D.

A backup and archive solution that extracts and restores data across orgs.

Correct answer: A. A middleware solution that extracts and distributes data across both the hub and spokes.

Explanation:

Middleware (e.g., MuleSoft, Informatica) centralizes bidirectional data orchestration in hub-and-spoke architectures. It detects changes in the hub and pushes delta updates to the spokes (and vice versa) in near real time. Custom APIs (B/C) require building polling logic, error handling, and security yourself, which creates maintenance overhead. Option B's "poll hub → push spokes" misses spoke-to-hub syncs, and Option C has the opposite gap. Backup tools (D) copy data but don't synchronize live changes. Middleware handles conflict resolution, logging, and scalability across orgs, making it the only sustainable solution for ongoing data visibility.
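A minimal sketch of one middleware orchestration cycle, assuming in-memory stand-ins for the orgs. The `Org` class, integer timestamps, and the `changes_since`/`upsert` helpers are all hypothetical, and last-write-wins is an assumed conflict policy. A real middleware product would use its own connectors and change detection (for example, Change Data Capture) instead.

```python
from dataclasses import dataclass, field


@dataclass
class Org:
    # Hypothetical in-memory org: record Id -> (last_modified, payload)
    name: str
    records: dict = field(default_factory=dict)

    def changes_since(self, watermark):
        """Records modified after the watermark (stand-in for change detection)."""
        return {rid: rec for rid, rec in self.records.items() if rec[0] > watermark}

    def upsert(self, rid, rec):
        """Apply an incoming record unless the local copy is newer (last-write-wins)."""
        current = self.records.get(rid)
        if current is None or rec[0] > current[0]:
            self.records[rid] = rec


def sync(hub, spokes, watermark):
    """One cycle: pull spoke changes into the hub, then push hub changes to every spoke."""
    for spoke in spokes:
        for rid, rec in spoke.changes_since(watermark).items():
            hub.upsert(rid, rec)
    hub_changes = hub.changes_since(watermark)
    for spoke in spokes:
        for rid, rec in hub_changes.items():
            spoke.upsert(rid, rec)
    # Next watermark: the latest timestamp now present in the hub.
    return max((rec[0] for rec in hub.records.values()), default=watermark)


hub = Org("central", {"acct-1": (5, "Acme v1")})
service = Org("service", {"acct-1": (7, "Acme v2 (service edit)")})
finance = Org("finance")
wm = sync(hub, [service, finance], watermark=0)
print(hub.records["acct-1"], finance.records["acct-1"], wm)
```

Pulling spokes into the hub first and then pushing the hub out is one design choice; it lets an edit made in one spoke reach the other spokes within a single cycle, with the hub remaining the single point of reconciliation.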

Get Cloudy Consulting monitors 15,000 servers, and these servers automatically record their status every 10 minutes. Because of company policy, these status reports must be maintained for 5 years. Managers at Get Cloudy Consulting need access to up to one week's worth of these status reports with all of their details. An Architect is recommending what data should be integrated into Salesforce and for how long it should be stored in Salesforce. Which two limits should the Architect be aware of? (Choose two.)

A.

Data storage limits

B.

Workflow rule limits

C.

API Request limits

D.

Webservice callout limits

Correct answers:

A. Data storage limits

C. API Request limits

Explanation:

A. Data storage limits: Storing 5 years’ worth of status updates for 15,000 servers would easily exceed Salesforce storage limits.

C. API request limits: Continuous and frequent updates (every 10 minutes) could breach daily API request limits.

Incorrect Options:

B. Workflow rule limits: Irrelevant unless using workflows, which is not stated.

D. Webservice callout limits: Applies when Salesforce initiates outbound calls, not the scenario here.
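The arithmetic behind both limits can be worked out directly. The sketch below assumes each status report is stored as one record charged about 2 KB of data storage (the usual per-record figure for most objects), a 365-day year, and, for API usage, either one call per report or sObject Collections requests carrying up to 200 records each.

```python
SERVERS = 15_000
REPORTS_PER_DAY = 24 * 60 // 10  # one status report every 10 minutes = 144 per day
KB_PER_RECORD = 2                # assumed data-storage charge per record
RECORDS_PER_COLLECTION_CALL = 200  # assumed batching via sObject Collections

per_day = SERVERS * REPORTS_PER_DAY
per_week = per_day * 7
five_years = per_day * 365 * 5  # ignores leap days


def kb_to_gb(kb):
    return kb / 1024 / 1024


# API usage if every report is its own call vs. batched 200 per call (ceiling division).
calls_unbatched = per_day
calls_batched = -(-per_day // RECORDS_PER_COLLECTION_CALL)

print(f"Records per day:    {per_day:,}")
print(f"Records per week:   {per_week:,} (~{kb_to_gb(per_week * KB_PER_RECORD):.1f} GB)")
print(f"Records in 5 years: {five_years:,} (~{kb_to_gb(five_years * KB_PER_RECORD) / 1024:.1f} TB)")
print(f"API calls per day:  {calls_unbatched:,} unbatched, {calls_batched:,} batched")
```

By this estimate, one week of reports is about 15 million records (roughly 29 GB), and five years is about 3.9 billion records (over 7 TB), which is why only the recent week fits the managers' need in Salesforce while the full history belongs in external storage.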

UC has one Salesforce org (Org A) and recently acquired a secondary company with its own Salesforce org (Org B). UC has decided to keep the orgs running separately but would like to bidirectionally share Opportunities between the orgs in near real time. Which 3 options should a data architect recommend to share data between Org A and Org B? Choose 3 answers.

A.

Leverage Heroku Connect and Heroku Postgres to bidirectionally sync Opportunities.

B.

Install a 3rd party AppExchange tool to handle the data sharing

C.

Develop an Apex class that pushes opportunity data between orgs daily via the Apex schedule.

D.

Leverage middleware tools to bidirectionally send Opportunity data across orgs.

E.

Use Salesforce Connect and the cross-org adapter to visualize Opportunities into external objects

Correct answers:

A. Leverage Heroku Connect and Heroku Postgres to bidirectionally sync Opportunities.

B. Install a 3rd party AppExchange tool to handle the data sharing

D. Leverage middleware tools to bidirectionally send Opportunity data across orgs.

Explanation:

A. Heroku Connect: Syncs Salesforce data bidirectionally with a Heroku Postgres database in near real time, so Postgres can serve as the shared store between the two orgs.

B. AppExchange tool: Pre-built solutions can offer low-code integration capabilities.

D. Middleware tools: Robust and scalable for real-time and bidirectional syncing.

Incorrect Options:

C. Scheduled Apex: Not near real-time and lacks error handling and flexibility.

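E. Salesforce Connect: Exposes the other org's Opportunities as external objects for real-time access, but it does not replicate them as Opportunity records, so it is not a true sync between the orgs.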

Universal Containers (UC) has an open sharing model for its Salesforce users to allow all its Salesforce internal users to edit all contacts, regardless of who owns the contact. However, UC management wants to allow only the owner of a contact record to delete that contact. If a user does not own the contact, then the user should not be allowed to delete the record. How should the architect approach the project so that the requirements are met?

A.

Create a "before delete" trigger to check if the current user is not the owner.

B.

Set the Sharing settings as Public Read Only for the Contact object.

C.

Set the profile of the users to remove delete permission from the Contact object.

D.

Create a validation rule on the Contact object to check if the current user is not the owner.

Correct answer: A. Create a "before delete" trigger to check if the current user is not the owner.

Explanation:

Triggers can enforce conditional logic, such as ownership-based restrictions, that declarative settings cannot. A "before delete" trigger can compare the running user's ID with each record's OwnerId and call addError() to block the deletion when they differ, which satisfies the requirement precisely.

Incorrect Options:

B. Public Read Only: Would stop users from editing Contacts they don't own, which contradicts the requirement that all internal users can edit all Contacts.

C. Profile-based delete restriction: Applies universally, not conditionally by owner.

D. Validation rule: Validation rules do not fire on delete events.
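The trigger logic is simple enough to show as a language-neutral sketch, written here in Python with hypothetical names. In Apex the equivalent would loop over Trigger.old, compare each record's OwnerId with UserInfo.getUserId(), and call addError() on records the user does not own; the `ContactRow` class and its `add_error` method below only mimic that behavior.

```python
from dataclasses import dataclass, field


@dataclass
class ContactRow:
    # Hypothetical stand-in for a Contact in Trigger.old
    id: str
    owner_id: str
    errors: list = field(default_factory=list)

    def add_error(self, message):
        # Mimics SObject.addError(): an error blocks the DML for this row.
        self.errors.append(message)


def before_delete(old_rows, current_user_id):
    """Flag every Contact the running user does not own; return the Ids still deletable."""
    for row in old_rows:
        if row.owner_id != current_user_id:
            row.add_error("Only the Contact owner can delete this record.")
    return [row.id for row in old_rows if not row.errors]


rows = [
    ContactRow("003X", owner_id="005-rep-a"),
    ContactRow("003Y", owner_id="005-rep-b"),
]
deletable = before_delete(rows, current_user_id="005-rep-a")
print(deletable)
```

Because the check runs per record, a bulk delete containing a mix of owned and non-owned Contacts blocks only the non-owned ones, which matches the requirement without touching the open edit access.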

Universal Containers wishes to retain data from Leads even after the Leads are deleted and cleared from the Recycle Bin. What approach should be implemented to achieve this solution?

A.

Use a Lead standard report and filter on the IsDeleted standard field.

B.

Use a Converted Lead report to display data on Leads that have been deleted.

C.

Query Salesforce with the queryAll API method or using the ALL ROWS SOQL keywords.

D.

Send data to a Data Warehouse and mark Leads as deleted in that system.

Correct answer: D. Send data to a Data Warehouse and mark Leads as deleted in that system.

Explanation:

Leads deleted and purged from the Recycle Bin are permanently unrecoverable in Salesforce.

Options A-C fail:

1. Reports (A/B) can’t access purged records.

2. queryAll (C) retrieves deleted records while they are still in the Recycle Bin, but not after they are purged.

Archiving Leads to a data warehouse (D) preserves historical data. Automation (e.g., trigger) can flag Leads as "deleted" in the EDW during Salesforce deletion. This complies with data retention policies without consuming Salesforce storage.
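A minimal sketch of the warehouse side, assuming a hypothetical `LeadWarehouse` table keyed by Lead Id. How the delete event reaches the warehouse is also an assumption: it could come from automation at delete time or from an integration that reads deleted Leads with queryAll before the Recycle Bin is emptied. The key design choice is a soft delete: the row is flagged, never removed.

```python
from datetime import datetime, timezone


class LeadWarehouse:
    """Hypothetical EDW table keyed by Salesforce Lead Id; rows are never physically removed."""

    def __init__(self):
        self.rows = {}

    def upsert(self, lead):
        # Insert the Lead or refresh the stored copy with its latest field values.
        row = self.rows.setdefault(lead["Id"], {"is_deleted": False, "deleted_at": None})
        row.update(lead)

    def mark_deleted(self, lead_id, when):
        # Soft delete: keep every field, just record that Salesforce deleted it.
        row = self.rows.get(lead_id)
        if row is not None:
            row["is_deleted"] = True
            row["deleted_at"] = when

    def active(self):
        return [r for r in self.rows.values() if not r["is_deleted"]]


edw = LeadWarehouse()
edw.upsert({"Id": "00Q1", "Company": "Acme", "Status": "Open"})
edw.upsert({"Id": "00Q2", "Company": "Globex", "Status": "Working"})
edw.mark_deleted("00Q1", datetime(2025, 6, 2, tzinfo=timezone.utc))
print(len(edw.rows), [r["Id"] for r in edw.active()])
```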

Universal Containers (UC) is implementing Salesforce and will be using Salesforce to track customer complaints, provide white papers on products, and provide subscription based support. Which license type will UC users need to fulfill UC's requirements?

A.

Sales Cloud License

B.

Lightning Platform Starter License

C.

Service Cloud License

D.

Salesforce License

Correct answer: C. Service Cloud License

Explanation:

UC’s needs include tracking complaints (cases), offering white papers (knowledge base), and managing subscription-based support, all of which are covered under the Service Cloud license. It includes features such as Cases, Knowledge, and Entitlements.

Incorrect Options:

A. Sales Cloud: Focused on Leads, Opportunities—not sufficient for service use cases.

B. Lightning Platform Starter: Very limited functionality, not intended for service support.

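D. Salesforce License: A general-purpose CRM user license; it does not specifically target the service features (Cases, Knowledge, Entitlements) that UC's requirements call for.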
