Total 273 Questions

Last Updated On : 27-Feb-2026

A Mule application is running on a customer-hosted Mule runtime in an organization's network. The Mule application acts as a producer of asynchronous Mule events. Each Mule event must be broadcast to all interested external consumers outside the Mule application. The Mule events should be published in a way that is guaranteed in normal situations and also minimizes duplicate delivery in less frequent failure scenarios. The organizational firewall is configured to only allow outbound traffic on ports 80 and 443. Some external event consumers are within the organizational network, while others are located outside the firewall. What Anypoint Platform service is most idiomatic (used for its intended purpose) for publishing these Mule events to all external consumers while addressing the desired reliability goals?

A. CloudHub VM queues

B. Anypoint MQ

C. Anypoint Exchange

D. CloudHub Shared Load Balancer

Explanation:

Let's break down the key requirements and constraints from the question:

Producer of Asynchronous Events:

The Mule application needs to send messages without waiting for an immediate response.

Broadcast to All Interested Consumers:

This is a classic publish-subscribe (pub/sub) messaging pattern. A single message from the producer should be received by multiple, independent consumers.

Guaranteed Delivery & Minimized Duplicates:

This requires a robust, managed messaging service that offers persistence and acknowledgments, so that every message is delivered in normal operation and redelivery (and therefore duplication) happens only when a failure occurs.

Firewall Constraints (Ports 80/443 only):

The solution must communicate over standard web protocols (HTTP/HTTPS). This rules out solutions that require custom, non-web ports.

Mixed Consumer Locations (Inside/Outside Firewall):

The solution must be accessible from both within the organizational network and from the public internet.

Why Anypoint MQ is the Correct Choice:

Intended Purpose:

Anypoint MQ is a fully managed, cloud-based messaging service (SaaS) specifically designed for reliable, asynchronous communication between applications, whether they are in the cloud or on-premises.

Publish-Subscribe Model:

It natively supports message exchanges and queues. The Mule application can publish an event to a message exchange, which then routes a copy of the message to every queue bound to that exchange. Each external consumer can have its own dedicated queue, achieving the "broadcast to all" requirement.

Reliability & Guaranteed Delivery:

Each queue persists its copy of a message until a consumer receives and acknowledges it, or until the message's time-to-live expires. This provides the required guarantee in normal situations. Because a message is redelivered only when it has not been acknowledged, duplicates are limited to the failure scenarios.
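
The publish, fan-out, and acknowledge cycle described above can be sketched in a few lines. This is a minimal in-memory model, not the Anypoint MQ API: the `Exchange` and `Queue` classes, the queue names, and the `nack` method are hypothetical names chosen for illustration.

```python
from collections import deque


class Queue:
    """In-memory stand-in for a durable queue with acknowledgments."""

    def __init__(self, name):
        self.name = name
        self.pending = deque()   # messages not yet delivered
        self.in_flight = {}      # delivered but not yet acknowledged

    def put(self, msg_id, body):
        self.pending.append((msg_id, body))

    def receive(self):
        """Hand out the next message and keep it until it is acked."""
        if not self.pending:
            return None
        msg_id, body = self.pending.popleft()
        self.in_flight[msg_id] = body
        return msg_id, body

    def ack(self, msg_id):
        """Consumer confirms processing; the message is removed for good."""
        self.in_flight.pop(msg_id, None)

    def nack(self, msg_id):
        """Consumer failed; the message becomes available again."""
        body = self.in_flight.pop(msg_id)
        self.pending.appendleft((msg_id, body))


class Exchange:
    """Copies every published message to each bound queue."""

    def __init__(self):
        self.queues = []

    def bind(self, queue):
        self.queues.append(queue)

    def publish(self, msg_id, body):
        for queue in self.queues:
            queue.put(msg_id, body)


exchange = Exchange()
inside = Queue("consumer-inside-firewall")
outside = Queue("consumer-outside-firewall")
exchange.bind(inside)
exchange.bind(outside)

exchange.publish("evt-1", {"order": 42})

# Each consumer gets its own copy of the event.
first = inside.receive()
second = outside.receive()
inside.ack(first[0])       # processed successfully
outside.nack(second[0])    # processing failed, so it is redelivered
redelivered = outside.receive()
```

The only duplicate delivery in this run is the redelivery after the failed consumer, which mirrors the "guaranteed in normal situations, few duplicates on failure" goal of the question.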

Firewall Compatibility:

As a cloud service, Anypoint MQ is accessible via HTTPS (port 443), perfectly satisfying the firewall constraint.

Accessibility:

Being a cloud service, it acts as a central, accessible endpoint for consumers both inside the corporate network (outbound HTTPS is allowed) and outside on the public internet.

Why the Other Options Are Incorrect:

A. CloudHub VM Queues:

VM queues exist inside a Mule application's runtime. On CloudHub they carry messages between the flows of one application, or between the workers of that application when persistent queues are enabled. They are not reachable from outside the application, so external consumers, whether on-premises or in other networks, cannot subscribe to them. The producer in this scenario also runs on a customer-hosted runtime, not on CloudHub.

C. Anypoint Exchange:

Exchange is the center for collaboration and asset sharing, not for real-time message delivery. It is where you publish API specifications, connectors, templates, and documentation. It is not a runtime messaging service and cannot receive or broadcast Mule events.

D. CloudHub Shared Load Balancer (SLB):

The SLB is responsible for routing HTTP/HTTPS requests to the correct Mule application within CloudHub. It is an ingress component for request-response flows. It is not designed for asynchronous, publish-subscribe messaging and does not provide message persistence or guaranteed delivery to multiple external subscribers.

Reference / Key Topic:

Anypoint MQ Documentation:

The core concepts of Exchanges, Queues, and Message Persistence are fundamental. An architect should understand the differences between point-to-point (queues) and publish-subscribe (exchanges) patterns.

Anypoint Platform Services:

Understanding the distinct purpose of each service (Exchange for design-time, MQ for runtime messaging, CloudHub for hosting) is crucial for making the correct architectural choice.

In summary, Anypoint MQ is the idiomatic, purpose-built service for creating reliable, asynchronous, and scalable integrations that span network boundaries, exactly as described in this scenario.

A rate limiting policy has been applied to a SOAP v1.2 API published in CloudHub. The API implementation catches errors in a global error handler (On Error Propagate) in the main flow, for HTTP:RETRY_EXHAUSTED with the HTTP status set to 429 and for ANY with the HTTP status set to 500. What is the expected HTTP status when the client exceeds the quota of the API calls?

A. HTTP status 429 as defined in the HTTP:RETRY_EXHAUSTED error handler in the API

B. HTTP status 500 as defined in the ANY error handler in the API since an API:RETRY_EXHAUSTED will be generated

C. HTTP status 401 unauthorized for policy violation

D. HTTP status 400 from the rate-limiting policy violation since the call does not reach the back-end

Explanation:

When a rate-limiting policy is applied to a SOAP v1.2 API published in CloudHub, and the client exceeds the API call quota, the rate-limiting policy triggers a specific error condition. In MuleSoft, when the rate limit is exceeded, the API Gateway typically generates an API:RETRY_EXHAUSTED error. The global error handler in the API implementation is configured to catch this HTTP:RETRY_EXHAUSTED error and return an HTTP status code of 429 (Too Many Requests), as explicitly stated in the question.

Here’s a breakdown of why the other options are incorrect:

B. HTTP status 500 as defined in the ANY error handler in the API since an API:RETRY_EXHAUSTED will be generated:

This is incorrect because the global error handler explicitly maps HTTP:RETRY_EXHAUSTED to HTTP status 429. The ANY error handler (which catches unhandled errors and returns HTTP 500) would only apply if the error is not specifically handled by the HTTP:RETRY_EXHAUSTED handler. Since the error is explicitly caught, the 429 status takes precedence.

C. HTTP status 401 unauthorized for policy violation:

This is incorrect because HTTP 401 (Unauthorized) is typically used for authentication failures, such as invalid credentials. Rate-limiting violations do not relate to authentication but rather to exceeding allowed request quotas, which aligns with HTTP 429.

D. HTTP status 400 from the rate-limiting policy violation since the call does not reach the back-end:

This is incorrect because HTTP 400 (Bad Request) is used for malformed requests or invalid client input. Rate-limiting violations are specifically handled with HTTP 429 in MuleSoft, as this status code is designed for cases where the client has sent too many requests in a given time frame.

References:

MuleSoft Documentation: MuleSoft’s API Manager and rate-limiting policies specify that exceeding the rate limit results in an HTTP 429 status code. The HTTP:RETRY_EXHAUSTED error is mapped to this status when configured in the error handler.

HTTP Status Codes (RFC 6585): The HTTP 429 (Too Many Requests) status code is defined in RFC 6585 as the appropriate response for rate-limiting violations.

MuleSoft Error Handling: The MuleSoft documentation on error handling explains how global error handlers can map specific errors (like HTTP:RETRY_EXHAUSTED) to custom HTTP status codes.
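
The precedence argument used in this explanation (a specific handler listed before ANY wins) can be illustrated with a small first-match lookup. This is a simplified sketch, not Mule's implementation: the function names are hypothetical, real error-type matching also takes parent types into account, and the sketch only models an error that actually reaches the application's error handler.

```python
def matches(handler_type, error_type):
    """ANY matches everything; otherwise the identifiers must be equal."""
    return handler_type == "ANY" or handler_type == error_type


def resolve_status(handlers, error_type):
    """Return the status of the first handler that matches, in listed order."""
    for handler_type, status in handlers:
        if matches(handler_type, error_type):
            return status
    return None  # no handler matched


# Handlers in the order the question lists them.
handlers = [("HTTP:RETRY_EXHAUSTED", 429), ("ANY", 500)]

retry_status = resolve_status(handlers, "HTTP:RETRY_EXHAUSTED")
other_status = resolve_status(handlers, "HTTP:TIMEOUT")

# If ANY were listed first, it would shadow the specific handler.
shadowed_status = resolve_status(list(reversed(handlers)), "HTTP:RETRY_EXHAUSTED")
```

Ordering matters: the specific handler only takes effect because it is evaluated before the catch-all.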

A retailer is designing a data exchange interface to be used by its suppliers. The interface must support secure communication over the public internet. The interface must also work with a wide variety of programming languages and IT systems used by suppliers. What are suitable interface technologies for this data exchange that are secure, cross-platform, and internet friendly, assuming that Anypoint Connectors exist for these interface technologies?

A. EDIFACT, XML over SFTP, JSON/REST over HTTPS

B. SOAP over HTTPS, HOP over TLS, gRPC over HTTPS

C. XML over ActiveMQ, XML over SFTP, XML/REST over HTTPS

D. CSV over FTP, YAML over TLS, JSON over HTTPS

Explanation:

Option A is the correct answer because it provides a balanced and realistic portfolio of integration styles that meet all the requirements: security, cross-platform compatibility, and internet-friendliness.

JSON/REST over HTTPS:

This is the cornerstone of a modern, internet-friendly API.

Secure:

HTTPS provides transport-layer security (encryption and authentication), which is the standard for secure communication over the public internet.

Cross-Platform:

REST is an architectural style, not a protocol. JSON is a simple, text-based data format. Virtually every modern programming language and platform has built-in support for HTTP, REST principles, and JSON parsing. This maximizes compatibility with the suppliers' diverse IT systems.

Internet-Friendly:

RESTful APIs are designed for the web. They use standard HTTP methods (GET, POST, PUT, DELETE) and are stateless, making them highly scalable and easy to consume.
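
As a rough illustration of how little a supplier needs in order to consume a JSON/REST interface over HTTPS, the sketch below builds such a request with only the Python standard library. The URL, resource path, and payload fields are hypothetical, and the request is constructed but not sent.

```python
import json
import urllib.request

# Hypothetical endpoint and payload, used only for illustration.
url = "https://api.example.com/suppliers/orders"
payload = {"supplierId": "S-100", "sku": "ABC-1", "quantity": 5}

request = urllib.request.Request(
    url,
    data=json.dumps(payload).encode("utf-8"),
    headers={"Content-Type": "application/json", "Accept": "application/json"},
    method="POST",
)

# The request is only built here; sending it would look like:
# with urllib.request.urlopen(request) as response:
#     result = json.loads(response.read())
```

Equivalent code exists in practically every language, which is the cross-platform point made above.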

XML over SFTP:

This is an excellent choice for batch-based or asynchronous data exchange.

Secure:

SFTP (SSH File Transfer Protocol) provides secure file transfer over a secure shell (SSH) data stream. It's a well-established standard for secure file movement.

Cross-Platform:

XML is a widely supported, structured data format. SFTP clients are available for almost every operating system.

Use Case:

This would be suitable for suppliers who need to send large files (e.g., daily inventory updates, bulk orders) rather than interacting with a real-time API.

EDIFACT:

While a legacy standard (EDI), it is still critically important in B2B scenarios, especially with large suppliers in retail, manufacturing, and logistics.

The inclusion of EDIFACT acknowledges that in a real-world retailer-supplier relationship, supporting established EDI standards is often a non-negotiable business requirement. The MuleSoft EDI module can translate EDIFACT to/from a more modern JSON/XML format internally.

This combination (A) offers a layered approach: real-time APIs (REST/HTTPS) for interactive processes, secure file transfer (SFTP) for bulk data, and support for mandatory B2B standards (EDIFACT).

Analysis of Other Options:

B. SOAP over HTTPS, JMS over TLS, gRPC over HTTPS

SOAP/HTTPS:

While secure and cross-platform (via WS-* standards), SOAP is generally considered more heavyweight, complex, and less "internet-friendly" than REST. It's a valid but less ideal choice for broad supplier adoption.

JMS over TLS:

JMS (Java Message Service) is a Java-specific API for messaging systems (like ActiveMQ). It is not cross-platform; it ties the solution to the Java ecosystem, violating a core requirement. While TLS can secure the connection, the protocol itself is not suitable for a wide variety of languages.

gRPC over HTTPS:

gRPC is a modern, high-performance RPC framework. However, it uses HTTP/2 and Protocol Buffers, which, while efficient, lack the universal, out-of-the-box support that JSON/REST has. It can be more challenging for some programming languages to consume.

C. XML over ActiveMQ, XML over SFTP, XML/REST over HTTPS

XML over ActiveMQ:

Similar to JMS in option B, ActiveMQ is a specific messaging broker. While clients exist for other languages, it introduces more complexity than a simple REST endpoint or SFTP server for connecting diverse systems. It's better suited for internal, asynchronous communication within a controlled environment.

This option is weaker than A because it lacks support for the critical JSON format and the essential EDIFACT standard, while pushing a less universal technology (ActiveMQ).

D. CSV over FTP, YAML over TLS, JSON over HTTPS

CSV over FTP:

This is a critical error. FTP (File Transfer Protocol) transmits data, including passwords, in clear text. It is not secure and must not be used over the public internet. SFTP or FTPS should always be used instead.

YAML over TLS:

While YAML is a valid data format, it is far less common than JSON or XML for API contracts. TLS provides security, but YAML is not a standard for web APIs, making it a poor choice for ensuring cross-platform compatibility.

The presence of the insecure FTP immediately disqualifies this option.

Key Concepts/References:

API-Led Connectivity: The correct answer reflects this approach. The Experience Layer (JSON/REST API) is easy for suppliers to consume, while the Process Layer orchestrates the underlying systems, which may involve translating to/from EDI (EDIFACT) or processing batch files (SFTP).

Security: The emphasis is on secure protocols: HTTPS (TLS/SSL) for web traffic and SFTP (over SSH) for file transfer. Insecure protocols like FTP are unacceptable.

Interoperability: The solution must use open, widely adopted standards (REST, JSON, XML) rather than technology-specific or niche protocols (JMS, gRPC, YAML).

B2B/EDI Integration: Recognizing that real-world scenarios, especially in retail, often require support for traditional EDI standards like EDIFACT or X12 alongside modern APIs.

Which Salesforce API is invoked to deploy, retrieve, create or delete customization information such as custom object definitions using a Mule Salesforce connector in a Mule application?

A. Metadata API

B. REST API

C. SOAP API

D. Bulk API

Explanation:

The Salesforce Metadata API is specifically designed for managing Salesforce customization and configuration, which is referred to as "metadata."

Purpose:

The Metadata API is used to manipulate the structural aspects of a Salesforce org. This includes operations on custom objects, fields, page layouts, workflows, Apex classes, and much more.

Operations:

The key verbs in the question—deploy, retrieve, create, delete—are the core operations of the Metadata API. For example, you would use the retrieve operation to get a package of metadata from a Salesforce org and the deploy operation to add it to another.

MuleSoft Connector:

The Anypoint Connector for Salesforce provides specific operations under the "Metadata" category (e.g., Create Metadata, Delete Metadata, Update Metadata, Retrieve Metadata). When you use these operations in your Mule application, the connector is making calls to the underlying Salesforce Metadata API.

The question explicitly mentions "customization information such as custom object definitions." This is the definitive domain of the Metadata API.

Analysis of Other Options:

B. REST API:

The Salesforce REST API is primarily used for performing CRUD (Create, Read, Update, Delete) operations on the data records (e.g., Account, Contact, Custom Object records) within Salesforce. It is not used for modifying the schema or definition of the objects themselves.

C. SOAP API:

The Salesforce SOAP API is an older, but still fully supported, API that also focuses on data manipulation (CRUD) and some administrative tasks. Similar to the REST API, its primary purpose is interacting with records, not the object definitions. The SOAP API is described by the enterprise and partner WSDLs for data access, while the Metadata API has its own, separate WSDL.

D. Bulk API:

The Bulk API is a specialized extension of the REST API designed for loading or deleting very large sets of data records asynchronously. It is optimized for handling millions of records. It has no functionality for managing object definitions or other metadata.

Key Concepts/References:

Salesforce APIs:

An Integration Architect must understand the distinct purposes of the different Salesforce APIs:

Data APIs (REST, SOAP, Bulk):

For working with records.

Metadata API:

For working with the schema and configuration.

Other APIs:

There are also specific APIs for Analytics, Streaming, etc., but the core distinction is between Data and Metadata.

Anypoint Connector Configuration:

When building integrations, selecting the correct operation within a connector is crucial. Knowing that metadata operations are separate from data operations prevents architectural mistakes.

MuleSoft Documentation:

The official documentation for the Salesforce Connector clearly separates its operations into categories like "Core" (for data) and "Metadata."

A Mule application is required to periodically process a large data set from a back-end database to Salesforce CRM, using a Batch Job scope properly configured to process the high volume of records. The application is deployed to two CloudHub workers with no persistence queues enabled. What is the consequence if a worker crashes during record processing?

A. Remaining records will be processed by a new replacement worker

B. Remaining records will be processed by the second worker

C. Remaining records will be left unprocessed

D. All the records will be processed from scratch by the second worker leading to duplicate processing

Explanation:

The key to this question lies in understanding two critical concepts: Batch Job Persistence and CloudHub Worker Independence.

Batch Job State Persistence:

For a Batch Job to be resilient to failures, its state (which records have been loaded, which are being processed, which have failed, etc.) must be persisted to a durable store. Mule uses an Object Store for this purpose. There are two types of Object Stores relevant here:

In-Memory Object Store:

Volatile and lives only in the RAM of the specific worker JVM.

Persistent Object Store:

Durable and shared across workers, typically using a database.

CloudHub Worker Independence:

Each CloudHub worker is a separate JVM instance. They do not share memory. If Worker 1 crashes, Worker 2 has no inherent knowledge of what Worker 1 was doing.

The Critical Configuration:

"no persistence queues enabled": This phrase is the decisive clue. It indicates that the Batch Job is not configured to use a persistent Object Store. Instead, it relies on the default in-memory Object Store.

Therefore, the sequence of events is:

The batch job starts on, for example, Worker 1.

It begins processing records, storing its progress in its in-memory Object Store.

Worker 1 crashes.

CloudHub detects the failure. Worker 2 is still healthy, so the next trigger of the batch job's source (for example, the next scheduled poll) runs there.

Worker 2 starts the batch job from the beginning. It has no way of knowing which records Worker 1 already processed because that state was lost when Worker 1's JVM died.

This results in the entire dataset being processed again, leading to duplicate records in Salesforce.
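
The sequence above can be reproduced with a small simulation that contrasts progress kept in a worker's memory with progress kept in a store that survives the crash. It is a sketch under simplifying assumptions: `run_batch`, the record IDs, and the plain Python `set` standing in for the state store are all hypothetical and unrelated to Mule's actual batch engine.

```python
def run_batch(records, progress, target, crash_after=None):
    """Process records not yet in `progress`; optionally crash part-way."""
    done = 0
    for record in records:
        if record in progress:
            continue  # already processed according to the known state
        if crash_after is not None and done == crash_after:
            raise RuntimeError("worker crashed")
        target.append(record)   # e.g. an insert into the target system
        progress.add(record)
        done += 1


records = ["r1", "r2", "r3", "r4"]

# Case 1: progress lives in the worker's memory and dies with it.
target_volatile = []
worker1_memory = set()
try:
    run_batch(records, worker1_memory, target_volatile, crash_after=2)
except RuntimeError:
    pass
worker2_memory = set()   # the second worker starts with no state
run_batch(records, worker2_memory, target_volatile)

# Case 2: progress is kept in a store that survives the crash.
target_durable = []
shared_store = set()
try:
    run_batch(records, shared_store, target_durable, crash_after=2)
except RuntimeError:
    pass
run_batch(records, shared_store, target_durable)
```

In the first case `r1` and `r2` reach the target twice; in the second case every record arrives exactly once because the restart skips what was already recorded.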

Analysis of Other Options:

A. Remaining records will be processed by a new replacement worker:

This would only be true if the Batch Job was configured with a persistent Object Store. The "new replacement worker" would be able to read the previous state and resume. Since persistence is disabled, this is incorrect.

B. Remaining records will be processed by the second worker:

This is incorrect for the same reason as A. The second worker is completely independent and unaware of the first worker's progress.

C. Remaining records will be left unprocessed:

This is incorrect because the application is still running on the second worker. The source (e.g., a database poll) will trigger again, and the batch job will execute. The records are not "left"; they are reprocessed in full.

Key Concepts/References:

Batch Job Reliability:

The official MuleSoft documentation explicitly states that for a Batch Job to be reliable in a multi-worker CloudHub environment, it must be configured to use a persistent Object Store. Failure to do so makes the batch instance non-resilient.

Object Store Viability:

An Integration Architect must understand the difference between in-memory and persistent object stores and their implications for high availability.

CloudHub Runtime Architecture:

Knowledge that workers are stateless and independent is fundamental. Any state that needs to survive a worker failure must be externalized (e.g., to a database, Redis, or a shared persistent file system).

Reference:

MuleSoft Documentation - Batch Job Processing (Specifically, sections on "Object Stores" and "High Availability").

How should the developer update the logging configuration in order to enable this package-specific debugging?

A. In Anypoint Monitoring, define a logging search query with class property set to org.apache.cxf and level set to DEBUG

B. In the Mule application's log4j2.xml file, add an AsyncLogger element with name property set to org.apache.cxf and level set to DEBUG, then redeploy the Mule application in the CloudHub production environment

C. In the Mule application's log4j2.xml file, change the root logger's level property to DEBUG, then redeploy the Mule application to the CloudHub production environment

D. In Anypoint Runtime Manager, in the Deployed Application Properties tab for the Mule application, add a line item with DEBUG level for package org.apache.cxf and apply the changes

Explanation:

To enable package-specific debugging for org.apache.cxf in a MuleSoft application, you need to configure logging at the package level in the Mule application's log4j2.xml configuration file. The log4j2.xml file is used to define logging behavior in Mule applications, including setting specific log levels for individual packages or classes. Here's why option B is correct and why the other options are incorrect:

Option B (Correct):

Adding an AsyncLogger element with the name property set to org.apache.cxf and the level set to DEBUG in the log4j2.xml file allows you to enable debugging specifically for the org.apache.cxf package without affecting other loggers. After updating the log4j2.xml file, redeploying the Mule application to CloudHub ensures that the new logging configuration takes effect. This is the standard and precise way to configure package-specific logging in MuleSoft.

Option A (Incorrect):

Anypoint Monitoring is used for searching and analyzing logs, not for configuring the logging behavior of a Mule application. Defining a logging search query with the class property set to org.apache.cxf and level set to DEBUG would only filter existing logs for that package at the DEBUG level but would not enable DEBUG logging if it’s not already configured in the log4j2.xml file. This option does not change the application’s logging behavior.

Option C (Incorrect):

Changing the root logger’s level to DEBUG in the log4j2.xml file would enable DEBUG logging for all packages and classes in the application, not just org.apache.cxf. This is not ideal for production environments, as it generates excessive log output, which can impact performance and make it harder to focus on the specific debugging information needed for org.apache.cxf.

Option D (Incorrect):

Anypoint Runtime Manager’s Deployed Application Properties tab is used to configure runtime properties (e.g., environment variables or application-specific properties) but does not support direct configuration of logging levels for specific packages like org.apache.cxf. Logging configuration must be done in the log4j2.xml file, not through Runtime Manager properties.
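
To make the difference between options B and C concrete, the sketch below adds a package-level `AsyncLogger` to a trimmed-down `log4j2.xml` and leaves the root logger at its original level. The sample configuration is an assumption for illustration only (the appender name `file` is a placeholder, and a real Mule `log4j2.xml` contains more elements); the editing is done with Python's standard `xml.etree.ElementTree` purely to show the resulting XML.

```python
import xml.etree.ElementTree as ET

# A trimmed-down log4j2.xml; the appender name "file" is a placeholder.
LOG4J2_XML = """<Configuration>
  <Loggers>
    <AsyncRoot level="INFO">
      <AppenderRef ref="file"/>
    </AsyncRoot>
  </Loggers>
</Configuration>"""

root = ET.fromstring(LOG4J2_XML)
loggers = root.find("Loggers")

# Package-level logger: only org.apache.cxf is raised to DEBUG.
cxf_logger = ET.Element("AsyncLogger", {"name": "org.apache.cxf", "level": "DEBUG"})
loggers.insert(0, cxf_logger)

updated_xml = ET.tostring(root, encoding="unicode")
print(updated_xml)
```

The printed configuration contains one extra `AsyncLogger` element for `org.apache.cxf`, while the root logger still reports `INFO`, which is the package-specific behavior option B describes.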

References:

MuleSoft Documentation: The MuleSoft documentation on logging explains how to configure the log4j2.xml file for custom logging, including package-specific log levels using AsyncLogger elements. See the "Logging in Mule" section in the MuleSoft Developer Portal.

Log4j2 Documentation: The Apache Log4j2 documentation details how to configure loggers, including the use of AsyncLogger for specific packages and setting log levels like DEBUG.

CloudHub Deployment: Redeploying a Mule application is necessary to apply changes to the log4j2.xml file, as logging configurations are bundled with the application.

A set of integration Mule applications, some of which expose APIs, are being created to enable a new business process. Various stakeholders may be impacted by this. These stakeholders are a combination of semi-technical users (who understand basic integration terminology and concepts such as JSON and XML) and technically skilled potential consumers of the Mule applications and APIs. What is an effective way for the project team responsible for the Mule applications and APIs being built to communicate with these stakeholders using Anypoint Platform and its supplied toolset?

A. Use Anypoint Design Center to implement the Mule applications and APIs and give the various stakeholders access to these Design Center projects, so they can collaborate and provide feedback

B. Create Anypoint Exchange entries with pages elaborating the integration design, including API notebooks (where applicable) to help the stakeholders understand and interact with the Mule applications and APIs at various levels of technical depth

C. Use Anypoint Exchange to register the various Mule applications and APIs and share the RAML definitions with the stakeholders, so they can be discovered

D. Capture documentation about the Mule applications and APIs inline within the Mule integration flows and use Anypoint Studio's Export Documentation feature to provide an HTML version of this documentation to the stakeholders

Explanation:

This is the most effective and comprehensive answer because it directly addresses the need to communicate with a mixed audience of stakeholders at their respective levels of technical understanding.

Centralized Discovery (Exchange):

Anypoint Exchange is the central hub for discoverability and sharing within the Anypoint Platform. It is the intended place to publish assets for stakeholders.

Tailored Communication (Pages):

Exchange allows you to create rich, custom pages. This is perfect for:

Semi-technical users:

You can create pages with high-level overviews, business process diagrams, and non-technical explanations of the API's purpose and impact.

Technical consumers:

You can include detailed technical specifications, architectural diagrams, and links to the API specification (RAML/OAS).

Interactive Understanding (API Notebooks):

This is the key differentiator. API Notebooks provide an interactive, scriptable environment within the browser. This allows technical consumers to immediately test and experiment with the API without writing any code, and it helps semi-technical users see the API in action, bridging the understanding gap. It caters to "various levels of technical depth" perfectly.

Holistic View:

This approach covers the entire lifecycle from design understanding to interactive testing, making it the most effective communication tool.

Analysis of Other Options:

A. Use Anypoint Design Center... give stakeholders access:

This is a poor choice for broad stakeholder communication.

Wrong Tool:

Design Center is a development tool for creating APIs and flows. It is not a communication or documentation tool for a diverse audience.

Information Overload:

Exposing semi-technical users to the actual implementation (flow canvas, code) would be confusing and ineffective.

Security & Clutter:

Granting broad stakeholder access to development projects is a security and management concern.

C. Use Anypoint Exchange to register... and share the RAML definitions:

This is a good start but is insufficient. It only serves the technically skilled consumers who can read and understand API specifications (RAML/OAS). It completely fails to communicate with the semi-technical users who would find a raw RAML file intimidating and unhelpful. It lacks the rich, multi-layered documentation offered by option B.

D. Capture documentation inline... use Anypoint Studio's Export Documentation:

This is the least effective option.

Stakeholder-Unfriendly:

The generated HTML documentation is highly technical, focusing on flow components and configuration. It is generated from the implementation perspective, not the consumer or business stakeholder perspective.

Not Centrally Managed:

This creates static HTML files that must be distributed manually. They are not discoverable in Exchange, will not be version-controlled with the API, and quickly become outdated. It defeats the purpose of using the collaborative Anypoint Platform.

Key Concepts/References:

API-Led Connectivity & Reusability: The core value of API-led connectivity is achieved through discoverability and understanding. Exchange is the primary tool to enable this.

Stakeholder Management: An Integration Architect must tailor communication for different audiences (business vs. technical). A one-size-fits-all approach (like sharing RAML or flow docs) fails.

Anypoint Exchange as a Collaboration Hub: Exchange is designed not just as a catalog but as a rich communication platform with pages, examples, and interactive notebooks.

API Notebooks: A powerful feature of the platform specifically designed for interactive documentation and testing, greatly accelerating API adoption.

What is an advantage of using OAuth 2.0 client credentials and access tokens over only API keys for API authentication?

A. If the access token is compromised, the client credentials do not have to be reissued.

B. If the access token is compromised, it can be exchanged for an API key

C. If the client ID is compromised, it can be exchanged for an API key

D. If the client secret is compromised, the client credentials do not have to be reissued

Explanation:

This answer correctly identifies a fundamental security benefit of the OAuth 2.0 token model over a simple API key.

Two-Tiered Security Model:

OAuth 2.0 (using the Client Credentials grant type for machine-to-machine communication) introduces a separation between long-term credentials and short-term access tokens.

Client Credentials (Client ID & Secret):

These are the long-term, root-level credentials. They are presented only to the authorization server, whenever a new access token is needed, and never to the API itself.

Access Token:

This is a short-lived credential that is actually presented to the API (resource server) for access.

The Advantage in a Breach Scenario:

Scenario:

An access token is accidentally exposed or stolen.

Outcome with OAuth 2.0:

Because the access token is short-lived, the impact is limited. The attacker can only use the token until it expires (which could be minutes or hours). The core, long-term client credentials (ID and Secret) remain safe and do not need to be changed. You simply wait for the token to expire or proactively revoke it if supported.

Outcome with Only an API Key:

An API key is typically a long-lived credential. If it is compromised, it is equivalent to the attacker having the permanent keys to your API. The only recourse is to revoke and reissue the API key, which can cause significant disruption as all applications using that key will break until the new key is distributed.

This separation of concerns (authentication vs. authorization) and the use of short-lived tokens is a core principle of modern API security.
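
The breach scenario can be walked through with a toy token issuer. This is a minimal sketch and not a real OAuth 2.0 implementation: the `AuthorizationServer` class, the client ID and secret values, the 300-second lifetime, and the explicit `now` parameter (used instead of a real clock so the run is deterministic) are all assumptions made for illustration.

```python
import secrets


class AuthorizationServer:
    """Toy token issuer; not a real OAuth 2.0 implementation."""

    def __init__(self, client_id, client_secret, ttl_seconds):
        self._client = (client_id, client_secret)
        self._ttl = ttl_seconds
        self._tokens = {}  # token -> expiry time

    def issue_token(self, client_id, client_secret, now):
        """Exchange valid client credentials for a short-lived token."""
        if (client_id, client_secret) != self._client:
            raise PermissionError("invalid client credentials")
        token = secrets.token_urlsafe(16)
        self._tokens[token] = now + self._ttl
        return token

    def is_valid(self, token, now):
        expiry = self._tokens.get(token)
        return expiry is not None and now < expiry


server = AuthorizationServer("my-client", "my-secret", ttl_seconds=300)

stolen = server.issue_token("my-client", "my-secret", now=0)
usable_early = server.is_valid(stolen, now=100)   # still inside its lifetime
usable_late = server.is_valid(stolen, now=301)    # expired on its own

# The same, unchanged client credentials obtain a fresh token.
fresh = server.issue_token("my-client", "my-secret", now=301)
fresh_ok = server.is_valid(fresh, now=302)
```

The stolen token stops working once its lifetime ends, and the legitimate client continues with the credentials it already had, which is the advantage stated in option A.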

Analysis of Other Options:

B. If the access token is compromised, it can be exchanged for an API key:

This is nonsensical. There is no standard mechanism to "exchange" a compromised OAuth token for an API key. The two are distinct authentication methods. The goal is to avoid using long-lived API keys altogether.

C. If the client ID is compromised, it can be exchanged for an API key:

This is also incorrect and misses the point. The client ID is often considered public information (e.g., it's in mobile app code). The security relies on the client secret being confidential. Compromising a client ID alone is not critical, but compromising it along with the secret is. Exchanging it for an API key is not a security advantage.

D. If the client secret is compromised, the client credentials do not have to be reissued:

This is the exact opposite of the truth and describes a major vulnerability. If the client secret is compromised, the entire set of client credentials is compromised. The attacker can use the client ID and secret to generate new access tokens at will. In this severe scenario, you must immediately reissue new client credentials (a new client secret, and potentially a new client ID). Here OAuth 2.0 offers no advantage over an API key, because both approaches require the long-lived credential to be reissued. The benefit of short-lived tokens applies when an access token, not the client secret, is exposed.

Key Concepts/References:

Principle of Least Privilege & Token Scope: OAuth tokens can be scoped to specific permissions, limiting the damage a compromised token can do. A simple API key typically has all-or-nothing access.

Short-Lived Credentials: The use of ephemeral access tokens with optional refresh tokens is a best practice for minimizing the attack surface.

OAuth 2.0 Client Credentials Grant: This is the specific grant type used for machine-to-machine (M2M) authentication where the client is a confidential application, which is the scenario implied when comparing to API keys.

API Key Limitations: Understanding that while API keys are simple, they lack the granular security features (scopes, short lifespan) of a robust token-based system like OAuth 2.0.
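The contrast between scoped tokens and all-or-nothing API keys can be pictured with a small sketch. The `AccessToken` type, the scope names such as `orders:read`, and the key value are hypothetical and chosen only for illustration; real resource servers validate tokens and scopes in their own ways.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessToken:
    """Hypothetical access token carrying the scopes it was granted."""
    subject: str
    scopes: frozenset


def authorize_token(token, required_scope):
    # A scoped token is accepted only for operations it was granted.
    return required_scope in token.scopes


def authorize_api_key(presented_key, valid_keys, required_scope):
    # A plain API key carries no scope information, so the required scope
    # cannot be checked: any valid key is accepted for every operation.
    return presented_key in valid_keys


token = AccessToken(subject="reporting-app", scopes=frozenset({"orders:read"}))
valid_keys = {"key-123"}  # hypothetical API key store

print(authorize_token(token, "orders:read"))                   # True
print(authorize_token(token, "orders:delete"))                 # False
print(authorize_api_key("key-123", valid_keys, "orders:delete"))  # True
```

A leaked read-only token cannot delete orders, while a leaked API key in this sketch can do anything the API offers.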

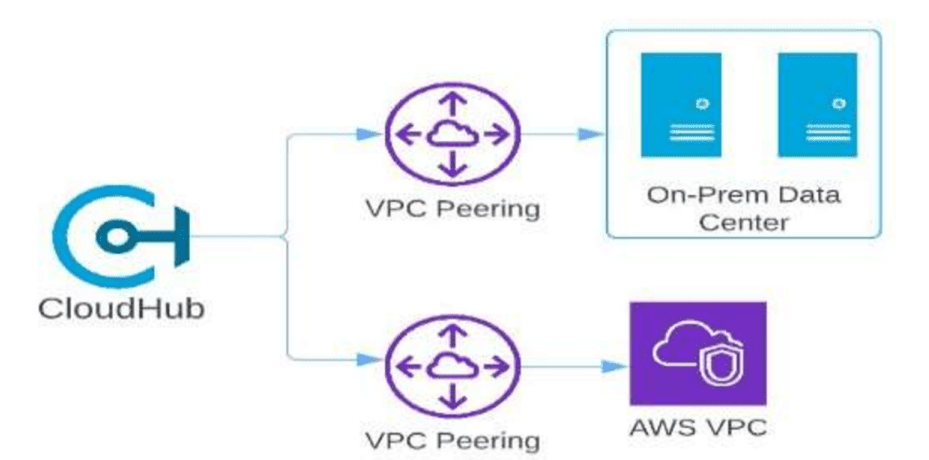

A gaming company has implemented an API as a Mule application and deployed the API implementation to a CloudHub 2.0 private space. The API implementation must connect to a mainframe application running in the customer’s on-premises corporate data center and also to a Kafka cluster running in an Amazon AWS VPC. What is the most efficient way to enable the API to securely connect from its private space to the mainframe application and Kafka cluster?

A. In Runtime Manager, set up VPC peering between the CloudHub 2.0 private network and the on-premises data center. In the AWS account, set up VPC peering between the AWS VPC and the CloudHub 2.0 private network.

B. In the AWS account, attach the CloudHub 2.0 private space to an AWS transit gateway that routes from the CloudHub 2.0 private space to the on-premises data center. In Runtime Manager, configure an Anypoint VPN to route from the CloudHub 2.0 private space to the AWS VPC.

C. In Runtime Manager, configure an Anypoint VPN to route from the CloudHub 2.0 private space to the on-premises data center. In the AWS account, attach the private..

D. In the AWS account, attach the private space directly to the AWS VPC. In the AWS account, use an AWS transit gateway to route from the AWS VPC to the on-premises data center.

Explanation:

The scenario involves a Mule application deployed in a CloudHub 2.0 private space that needs to securely connect to both an on-premises mainframe application and a Kafka cluster in an AWS VPC. The most efficient and secure approach requires establishing connectivity between the CloudHub private space and both environments while leveraging MuleSoft's and AWS's native networking capabilities. Here's a detailed analysis:

Option C (Correct):

Anypoint VPN to On-Premises Data Center:

Configuring an Anypoint VPN in Runtime Manager allows the CloudHub 2.0 private space to establish a secure, encrypted connection to the customer's on-premises data center. This VPN facilitates communication with the mainframe application over a private network, ensuring security and compliance with on-premises connectivity requirements.

Direct Attachment to AWS VPC:

Attaching the CloudHub 2.0 private space directly to the AWS VPC enables private, secure communication with the Kafka cluster. CloudHub 2.0 supports direct VPC attachment, which simplifies networking by allowing the private space to operate within the AWS VPC network, avoiding the need for additional routing or peering configurations.

Efficiency:

This approach minimizes complexity by using Anypoint VPN for on-premises connectivity and direct VPC attachment for AWS, leveraging existing MuleSoft and AWS integrations. It avoids the overhead of managing multiple VPC peering setups or transit gateways.
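One way to picture the resulting setup is as a small route table in which each destination network is reached over exactly one connection. The sketch below is purely conceptual: the CIDR ranges and the connection labels `anypoint-vpn` and `aws-attachment` are assumptions for illustration and do not correspond to actual Runtime Manager or AWS settings.

```python
import ipaddress

# Hypothetical route table for the private space: (destination network, connection).
ROUTES = [
    (ipaddress.ip_network("10.20.0.0/16"), "anypoint-vpn"),     # assumed on-premises range
    (ipaddress.ip_network("172.31.0.0/16"), "aws-attachment"),  # assumed AWS VPC range
]


def next_hop(destination):
    """Return the connection used to reach `destination`, or None if no route matches."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in ROUTES if addr in net]
    if not matches:
        return None
    # Longest-prefix match: the most specific network wins.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


print(next_hop("10.20.5.9"))    # anypoint-vpn (mainframe side)
print(next_hop("172.31.4.10"))  # aws-attachment (Kafka side)
print(next_hop("8.8.8.8"))      # None (no private route)
```

Each private destination has a single, direct path from the private space, which is the simplicity the explanation attributes to this option.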

Option A (Incorrect):

VPC Peering Between CloudHub and On-Premises:

VPC peering is typically used between AWS VPCs or between CloudHub and AWS VPCs, but it is not a standard method to connect CloudHub to an on-premises data center. Anypoint VPN is the recommended approach for on-premises connectivity in MuleSoft.

VPC Peering Between AWS VPC and CloudHub:

While this can work for connecting to the AWS VPC, it requires additional configuration and maintenance compared to direct VPC attachment, which is more streamlined for CloudHub 2.0 private spaces.

Inefficiency:

Managing two separate VPC peering setups adds complexity and potential points of failure.

Option B (Incorrect):

AWS Transit Gateway to On-Premises:

Attaching the CloudHub private space to an AWS transit gateway that routes to the on-premises data center is possible but requires the on-premises network to be integrated with AWS (e.g., via AWS Direct Connect or VPN). This assumes additional infrastructure that may not be in place and adds complexity.

Anypoint VPN to AWS VPC:

Configuring an Anypoint VPN to route to the AWS VPC is unnecessary since CloudHub 2.0 supports direct VPC attachment, which is a simpler and more efficient method for AWS connectivity.

Inefficiency:

This option overcomplicates the solution by mixing transit gateway routing with an unnecessary VPN to AWS.

Option D (Incorrect):

Direct Attachment to AWS VPC:

This part is correct and aligns with CloudHub 2.0's capability to attach private spaces to an AWS VPC for Kafka cluster connectivity.

AWS Transit Gateway to On-Premises:

Using an AWS transit gateway to route from the AWS VPC to the on-premises data center assumes the on-premises network is already integrated with AWS (e.g., via Direct Connect or VPN). However, this does not address the initial connection from the CloudHub private space to the on-premises data center, leaving a gap in the solution.

Incomplete Solution:

This option lacks a mechanism to securely connect the CloudHub private space to the on-premises data center, making it insufficient.

References:

MuleSoft Documentation: The CloudHub 2.0 documentation details the use of Anypoint VPN for secure connectivity to on-premises networks and direct VPC attachment for AWS integration (see CloudHub Networking section).

AWS Documentation: AWS VPC attachment and transit gateway configurations are outlined in the AWS Networking & Content Delivery documentation, though transit gateways are more relevant for complex multi-VPC routing.

Best Practices: MuleSoft recommends Anypoint VPN for on-premises connectivity and direct VPC attachment for AWS to ensure secure and efficient private space networking.

An integration Mule application consumes and processes a list of rows from a CSV file. Each row must be read from the CSV file, validated, and the row data sent to a JMS queue, in the exact order as in the CSV file. If any processing step for a row fails, then a log entry must be written for that row, but processing of other rows must not be affected. What combination of Mule components is most idiomatic (used according to their intended purpose) when implementing the above requirements?

A. Scatter-Gather component, On Error Continue scope

B. VM connector, First Successful scope, On Error Propagate scope

C. For Each scope, On Error Continue scope

D. Async scope, On Error Propagate scope

Explanation:

Option C is correct. This combination is the most idiomatic because it directly maps to the requirements of processing a collection (the CSV rows) sequentially and handling errors per item without stopping the overall process.

For Each Scope:

This is the primary component for iterating over a list (the CSV rows). Its default behavior is to process each item sequentially and in order, which perfectly satisfies the requirement to process rows "in the exact order as in the CSV file."

On Error Continue Scope:

This error handler is designed to catch errors within its scope, handle them (e.g., by logging), and then allow the main flow to continue processing. When placed inside the For Each scope (in practice, in the error handler of a Try scope that wraps the per-row processing), it will catch any error that occurs while processing a single row (e.g., validation failure, JMS connection issue). The error handler can then execute a logging operation for that specific failed row, and the For Each scope will proceed to the next row, ensuring that "processing of other rows must not be affected."

This design is clean, efficient, and uses the components precisely for their intended purposes: iteration and granular error handling.
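The same pattern can be sketched outside Mule as a plain loop with per-row error handling. This is a Python analogy rather than Mule configuration: the `validate` rule, the column names `id` and `amount`, and the `publish` callback standing in for the JMS publish step are all assumptions made for the example.

```python
import csv
import io
import logging

logger = logging.getLogger("csv-to-jms")


def validate(row):
    """Hypothetical validation: every row needs an id and a numeric amount."""
    if not row.get("id"):
        raise ValueError("missing id")
    float(row.get("amount") or "")  # raises ValueError if not numeric


def process_rows(csv_text, publish):
    """Process rows in file order; log failures and keep going (For Each + On Error Continue)."""
    failed_lines = []
    reader = csv.DictReader(io.StringIO(csv_text))
    # Line 1 is the header, so the first data row is line 2.
    for line_no, row in enumerate(reader, start=2):
        try:
            validate(row)
            publish(row)  # stand-in for sending the row to the JMS queue
        except Exception as exc:
            # Handle the error for this row only, then continue with the next row.
            logger.error("row at line %d failed: %s", line_no, exc)
            failed_lines.append(line_no)
    return failed_lines


csv_text = "id,amount\n1,10.5\n2,abc\n3,7\n"
queue = []  # in-memory stand-in for the JMS queue
failed = process_rows(csv_text, queue.append)

print([row["id"] for row in queue])  # ['1', '3']
print(failed)                        # [3]
```

The invalid second row is logged and skipped, while the rows before and after it reach the queue in their original order.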

Analysis of Other Options:

A. Scatter-Gather component, On Error Continue scope:

This is a poor choice. The Scatter-Gather component is designed for parallel execution of a fixed set of routes, each of which receives the same Mule event; it is not an iterator over a collection. Processing the rows concurrently would also give no ordering guarantee, which directly violates the requirement for exact order. Furthermore, it's an overly complex component for a simple sequential iteration task.

B. VM connector, First Successful scope, On Error Propagate scope:

This option suggests an asynchronous, multi-flow design using the VM queue as a buffer. While potentially workable, it is not the most idiomatic or straightforward.

It is more complex, requiring multiple flows and a messaging system (VM) for a task that can be done in a single flow.

The On Error Propagate scope would cause the entire processing of a message (a row) to fail and be sent to the error handler of the main flow. While you could log it there, it's less granular than having the error handling logic directly inside the iteration loop. The First Successful scope is redundant in this context, since there are no alternative routes to try for a row.

This approach is "over-engineered" for the stated requirements.

D. Async scope, On Error Propagate scope:

This is incorrect for several reasons.

The Async Scope processes its content in a separate thread, decoupled from the main flow. There is no guarantee that the order of processing will be maintained, which breaks the core requirement of exact order.

Like option B, the On Error Propagate scope would cause the entire async block to fail. If this async block is processing a batch of rows, the entire batch might fail. If it's per row (which would be very inefficient), the error would still be less conveniently handled than with an On Error Continue scope inside a For Each.

Key Concepts/References:

Iteration:

Use the For Each scope for sequential processing of a collection. Use parallel processing components (Async, Scatter-Gather) only when order does not matter.

Error Handling Strategy:

On Error Propagate:

Stops the current execution and signals the error to the parent flow. Use this when an error should be considered a failure for the entire transaction.

On Error Continue:

Handles the error locally and allows the parent flow to continue as if no error occurred. Use this for non-critical errors within a larger process, exactly as described in the requirement.
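The difference between the two strategies can be illustrated with a rough Python analogy, in which "propagate" re-raises the error to the caller and "continue" absorbs it. The function names and the `check_positive` step are hypothetical and are not Mule APIs.

```python
def check_positive(value):
    """Hypothetical processing step that fails for negative numbers."""
    if value < 0:
        raise ValueError(f"negative value: {value}")


def run_items(items, step, on_error):
    """Apply `step` to each item; `on_error` is 'propagate' or 'continue'."""
    completed = []
    for item in items:
        try:
            step(item)
            completed.append(item)
        except ValueError:
            if on_error == "propagate":
                raise  # the caller sees the failure and the remaining items are skipped
            # 'continue': the error is handled here and the loop moves on
    return completed


print(run_items([1, -2, 3], check_positive, "continue"))  # [1, 3]

try:
    run_items([1, -2, 3], check_positive, "propagate")
except ValueError as exc:
    print(f"stopped early: {exc}")  # stopped early: negative value: -2
```

With "continue" the third item is still processed; with "propagate" the failure on the second item ends the whole run.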

Idiomatic Mule Design:

The simplest, most direct solution that uses components for their primary purpose is typically the best. For Each with an internal On Error Continue is the standard pattern for "process all items, log failures, and keep going."

