Total 202 Questions

Last Updated On : 4-Feb-2026

Preparing with a Salesforce-Platform-Developer-II practice test is essential to ensure success on the exam. This practice test lets you familiarize yourself with the format of the Salesforce-Platform-Developer-II exam questions and identify your strengths and weaknesses. By practicing thoroughly, you can maximize your chances of passing the 2026 Salesforce certification exam on your first attempt. Surveys from different platforms and user-reported pass rates suggest that users of the Salesforce Certified Platform Developer II (Plat-Dev-301) practice exam are ~30-40% more likely to pass.

A developer implemented a custom data table in a Lightning web component with filter functionality. However, users are submitting support tickets about long load times when the filters are changed. The component uses an Apex method that is called to query for records based on the selected filters. What should the developer do to improve performance of the component?

A. Return all records into a list when the component is created and filter the array in JavaScript.

B. Use a selective SOQL query with a custom index.

C. Use SOSL to query the records on filter change.

D. Use setStorable() in the Apex method to store the response in the client-side cache.

Explanation:

🔍 Question Context Recap

A Lightning Web Component (LWC) is experiencing long load times when filters are changed. Each time the user changes a filter, an Apex method is invoked that queries Salesforce records using SOQL (Salesforce Object Query Language). The issue is clearly related to inefficient querying, causing performance bottlenecks.

✅ Correct Answer: B. Use a selective SOQL query with a custom Index

🔧 What is a Selective SOQL Query?

A selective SOQL query is a query that uses selective filters (like indexed fields) to limit the number of records retrieved from the database. Salesforce strongly encourages the use of selective queries because they optimize performance, especially in large data sets.

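A query is considered selective when at least one of its filters uses an indexed field and that filter matches only a small share of the object's records. For a custom index, the filter must match fewer than 10% of the first million records and 5% of any records beyond that, up to a maximum of 333,333 records; standard indexes allow 30% and 15%, up to 1 million records.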
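To make the custom-index threshold concrete, here is a small Apex sketch that computes it for a given object size, assuming the limits Salesforce documents for custom indexes (10% of the first million records, 5% of the records beyond that, capped at 333,333). The class and method names are hypothetical, and the real query optimizer weighs more factors than this single rule.

```apex
// Hypothetical helper: encodes the documented custom-index selectivity threshold.
public class SelectivityThreshold {
    private static final Long ONE_MILLION = 1000000L;
    private static final Long CUSTOM_INDEX_CAP = 333333L;

    // Maximum number of matching rows for a filter on a custom-indexed field
    // to count as selective: 10% of the first million records, 5% of the
    // records beyond that, never more than 333,333.
    public static Long customIndexThreshold(Long totalRecords) {
        Long firstMillion = Math.min(totalRecords, ONE_MILLION);
        Long remainder = Math.max(totalRecords - ONE_MILLION, 0L);
        Long threshold = firstMillion / 10 + remainder / 20;
        return Math.min(threshold, CUSTOM_INDEX_CAP);
    }

    // True when the filter matches fewer rows than the threshold.
    public static Boolean isSelective(Long matchingRecords, Long totalRecords) {
        return matchingRecords < customIndexThreshold(totalRecords);
    }
}
```

For an object with 2,000,000 records, the threshold works out to 100,000 + 50,000 = 150,000 rows, so a filter value matching 120,000 records would still qualify as selective.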

Non-selective queries can lead to full table scans, which are expensive and slow, especially when the object contains hundreds of thousands or millions of records.

🔎 What is a Custom Index?

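While some fields (e.g., Id, Name, CreatedDate, LastModifiedDate) are automatically indexed by Salesforce, most custom fields are not indexed by default. Exceptions include lookup and master-detail relationship fields and fields marked as External ID or Unique, which are indexed automatically.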

However, Salesforce allows you to request a custom index on a field by contacting Salesforce Support. Fields that are often good candidates for custom indexing include:

Fields frequently used in WHERE clauses.

Fields used in filters on reports or Apex queries.

Fields with a good amount of selectivity (i.e., the field values vary widely across records).

✅ Why This Solves the Problem:

When filters are applied in the LWC, the Apex method uses them in a SOQL query.

If that query is non-selective (e.g., filtering on a non-indexed custom field), Salesforce may scan the entire table to find matching records, causing delays.

By indexing the relevant filter fields and designing the SOQL query to use highly selective filters, the developer lets the query optimizer use the index instead of scanning the table, which can improve query performance drastically and reduce the component's load time.
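A minimal sketch of the server side of option B, assuming a custom object Case__c with a custom-indexed Status__c field and a hypothetical class FilteredTableController. The equality filter on the indexed field is what lets the optimizer use the index; the guard avoids running an unfiltered, non-selective query when no filter is selected.

```apex
// Hypothetical controller behind the LWC data table. Case__c and Status__c
// are assumed names; Status__c is assumed to carry a custom index.
public with sharing class FilteredTableController {
    @AuraEnabled(cacheable=true)
    public static List<Case__c> getFilteredRecords(String status) {
        // Guard: without the indexed filter the query would be non-selective.
        if (String.isBlank(status)) {
            return new List<Case__c>();
        }
        // Equality filter on the (assumed) indexed field; LIMIT caps how many
        // rows are sent back to the component.
        return [
            SELECT Id, Name, Status__c
            FROM Case__c
            WHERE Status__c = :status
            ORDER BY Name
            LIMIT 200
        ];
    }
}
```

Marking the method cacheable=true also lets the LWC call it through @wire and reuse cached responses for filter values it has already requested, but the caching is secondary: the selective query is what shortens each new request.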

❌ Explanation of Incorrect Options:

❌ A. Return all records into a list when the component is created and filter the array in JavaScript

Why it’s wrong:

This approach means loading all records at once into the client (browser) — which is a huge performance and scalability issue.

It violates the principle of lazy loading or on-demand querying, which is necessary in applications with potentially thousands of records.

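It may hit Apex governor limits, such as the 50,000-row SOQL query limit and the heap size limit, leading to runtime errors.

It also sends far more data to the browser than the user needs and, if the Apex class does not enforce sharing, could expose records the user is not authorized to see.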

❌ C. Use SOSL to query the records on filter change

Why it’s wrong:

SOSL (Salesforce Object Search Language) is designed for full-text search across multiple objects.

It is optimal when you need to search across multiple fields or multiple objects for a keyword (e.g., global search).

In this scenario, you are querying specific fields with filters, so SOQL is the correct tool.

SOSL is not filter-oriented and does not support complex filtering like SOQL.

Using SOSL may actually lead to less accurate or irrelevant results and poor performance if misused.

❌ D. Use setStorable() in the Apex method to store the response in the client-side cache

Why it’s wrong:

setStorable() is used to cache Apex method results on the client side to avoid repeat server trips. While it helps reduce server round-trips, it does not improve the performance of the Apex method itself.

In this case, the problem occurs every time filters change, triggering a new query, which means the results are not cached unless the exact same filter was used before.

It’s a good technique for read-heavy, rarely changing data, but not useful when the filters dynamically change.

📘 Real-World Example and Reference:

Imagine querying a Case__c object with millions of records. If you filter on a field like Status__c that is not indexed, the query may take seconds or time out. But if you have Salesforce Support create a custom index on Status__c and make sure the value being filtered occurs in fewer than 10% of records (good selectivity), your query may execute in milliseconds.

Salesforce Best Practices Documentation:

SOQL Performance Tuning

Using Selective Queries

Custom Indexes in Salesforce

A developer is inserting, updating, and deleting multiple lists of records in a single transaction and wants to ensure that any error prevents all execution. How should the developer implement error exception handling in their code to handle this?

A. Use Database methods to obtain lists of Database.SaveResults.

B. Use a try-catch statement and handle DML cleanup in the catch statement.

C. Use Database.setSavepoint() and Database.rollback() with a try-catch statement.

D. Use a try-catch and use sObject.addError() on any failures.

Explanation:

When a developer is performing multiple data manipulation operations—such as inserting, updating, or deleting lists of records—in a single transaction, maintaining data integrity is crucial. This means that either all operations should succeed or none at all. If any one of the operations fails, the system should revert to the original state as if nothing had happened. This is the foundational principle of atomicity in transactional systems.

The correct way to enforce this behavior in Salesforce Apex is to use a combination of Database.setSavepoint() and Database.rollback() within a try-catch block. This allows the developer to define a specific point in time (a "savepoint") before any DML (Data Manipulation Language) operations are performed. If an exception occurs at any point during the sequence of operations, the code within the catch block can execute a rollback to that predefined savepoint, effectively undoing all the changes made after the savepoint was set. This ensures that the database remains in a consistent state and that no partial or unintended data changes occur.

This approach is especially important in complex business logic where multiple objects or large sets of data are involved, and the failure of even one operation should invalidate the entire transaction. Without a savepoint, catching the exception leaves Apex's default behavior in place: only the failed DML statement is rolled back, while any earlier successful DML statements remain and are committed when the transaction ends, leading to inconsistent data. (An uncaught exception would roll back the whole transaction, but the code would then have no chance to handle the error.)

To reinforce:

→ Savepoints provide manual control over transaction checkpoints.

→ Rollbacks to a savepoint undo all operations that occurred after it.

→ This method guarantees all-or-nothing execution within a single transaction.
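A minimal sketch of option C, assuming a hypothetical service method that receives the three lists to process. The savepoint is taken before the first DML statement, and any exception rolls the database back to it, so either all three statements take effect or none do.

```apex
// Hypothetical service class; the object types and list parameters are assumptions.
public with sharing class BulkDataService {
    // Returns true when every DML statement succeeded, false after a rollback.
    public static Boolean saveAllOrNothing(
        List<Account> toInsert, List<Account> toUpdate, List<Contact> toDelete
    ) {
        // Checkpoint taken before any DML runs.
        Savepoint sp = Database.setSavepoint();
        try {
            insert toInsert;
            update toUpdate;
            delete toDelete;
            return true;
        } catch (Exception e) {
            // Undo every insert, update, and delete made after the savepoint.
            Database.rollback(sp);
            System.debug(LoggingLevel.ERROR, 'Rolled back: ' + e.getMessage());
            return false;
        }
    }
}
```

One caveat from the Apex documentation: the Id assigned to a record inserted after the savepoint is not cleared by the rollback, so create fresh sObjects (or clear their Ids) before retrying the insert.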

Using Database.setSavepoint() and Database.rollback() not only improves error resilience but also aligns with best practices for ensuring data consistency and system reliability in enterprise-level Salesforce applications.

A Salesforce Platform Developer is leading a team that is tasked with deploying a new application to production. The team has used source-driven development, and they want to ensure that the application is deployed successfully. What tool or mechanism should be used to verify that the deployment is successful?

A. Force.com Migration Tool

B. Salesforce DX CLI

C. Apex Test Execution

D. Salesforce Inspector

Explanation:

✅ Correct Answer: B. Salesforce DX CLI

Salesforce DX CLI (Command Line Interface) is the correct choice because it is the primary tool used in source-driven development environments on the Salesforce platform. This tool enables developers and DevOps teams to manage the full lifecycle of a Salesforce application, from source code to deployment. In source-driven development, all metadata and code are stored in a version control system (such as Git), and changes are pushed and pulled from different Salesforce orgs using tools like the DX CLI.

The Salesforce DX CLI provides powerful commands to not only deploy metadata to production but also to validate deployments, run Apex tests, track changes, and confirm that deployment was successful. Since this CLI can return detailed status codes, logs, and test outcomes after deployment, it gives developers confidence that the new application is working as expected. Additionally, it is designed to integrate seamlessly into continuous integration/continuous delivery (CI/CD) pipelines, making it an essential component in modern Salesforce DevOps strategies. This aligns perfectly with the scenario described, where the developer wants to ensure a reliable and validated deployment using a tool built specifically for source-driven workflows.

❌ Option A: Force.com Migration Tool

The Force.com Migration Tool (now called the Ant Migration Tool) is an older, Ant-based tool that uses package.xml files and ZIP archives to deploy metadata to Salesforce environments. While it can deploy metadata, run check-only (validation) deployments, and run Apex tests as part of a deployment, it lacks many of the modern capabilities and conveniences offered by the Salesforce DX CLI. This tool is typically used in more traditional or legacy Salesforce development workflows and reports deployment results mainly through console output.

It also works with the Metadata API file format and package.xml manifests rather than the source format, source tracking, and scratch orgs that Salesforce DX provides, making it less suitable for source-driven development environments. In a modern Salesforce DevOps team that prioritizes automation, validation, and traceability, the Force.com Migration Tool would be considered outdated. As such, it would not be the recommended tool for verifying successful deployment of an application in a source-controlled, team-led release process.

❌ Option C: Apex Test Execution

Apex Test Execution refers to the process of running unit tests written in Apex to validate the logic and behavior of code. While running these tests is a crucial part of ensuring application quality, this option is not a complete deployment verification solution on its own. Apex tests run as part of a production deployment and can be rerun afterward to confirm that the deployed logic behaves as intended. However, they do not verify whether the deployment itself was structurally or operationally successful.

For instance, Apex Test Execution does not indicate whether metadata components (like Lightning components, custom objects, or validation rules) were deployed correctly. It also doesn’t manage the deployment process or provide metadata coverage analysis, both of which are essential when verifying the full success of a deployment. Therefore, while useful in validation, Apex Test Execution is not the correct tool for confirming deployment success in a source-driven development lifecycle.

❌ Option D: Salesforce Inspector

Salesforce Inspector is a browser-based extension that developers use to inspect data, view metadata fields, perform quick queries, and export data. It is a useful productivity tool for viewing field values and record data directly in the Salesforce UI. However, it is not a tool designed for application deployment, nor can it validate whether a deployment has succeeded. It does not interact with the deployment pipeline or provide logs, test results, or deployment summaries.

Since it functions only within the browser and on the front end, its purpose is limited to inspecting and editing individual records and fields — not managing or confirming deployments. As such, while valuable in daily administrative or debugging tasks, Salesforce Inspector does not support source-driven development workflows and cannot be used to ensure a successful deployment of an application to production.

Reference:

Salesforce DX Overview

A developer writes a Lightning web component that displays a dropdown list of all custom objects in the org from which a user will select. An Apex method prepares and returns data to the component. What should the developer do to determine which objects to include in the response?

A. Check the isCustom() value on the sObject describe result.

B. Import the list of all custom objects from @salesforce/schema.

C. Check the getObjectType() value for 'Custom' or 'Standard' on the sObject describe result.

D. Use the getCustomObjects() method from the Schema class.

Explanation:

✅ Correct Answer: A. Check the isCustom() value on the sObject describe result

The correct approach for identifying custom objects in Salesforce is by using the isCustom() method on the results obtained from the sObject Describe API. This method is part of the DescribeSObjectResult class in the Apex Schema namespace and returns a Boolean value that tells whether a particular object is custom (true) or standard (false). When a developer writes an Apex method to support a Lightning Web Component (LWC) that displays a dropdown list of only custom objects, the Apex code would typically use the Schema.getGlobalDescribe() method to retrieve a map of all sObjects in the org.

Then, for each object, the developer would call describe() to get its metadata and invoke isCustom() to filter only those with a true result. This method is both reliable and native to Salesforce's metadata introspection tools, making it the most appropriate way to dynamically identify and return only custom objects. This enables the LWC to display only the relevant options without hardcoding any object names or relying on schema imports, which could become outdated or inflexible.
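The filtering logic described above might look like this in an @AuraEnabled method. The class name and the label/value return shape (which suits a lightning-combobox) are assumptions, as is the decision to exclude custom settings with isCustomSetting(), since they also end in __c and may report isCustom() as true.

```apex
// Hypothetical controller for the LWC dropdown.
public with sharing class CustomObjectOptionsController {
    // Returns label/value pairs for every custom object in the org.
    @AuraEnabled(cacheable=true)
    public static List<Map<String, String>> getCustomObjectOptions() {
        List<Map<String, String>> options = new List<Map<String, String>>();
        for (Schema.SObjectType objectType : Schema.getGlobalDescribe().values()) {
            Schema.DescribeSObjectResult result = objectType.getDescribe();
            // Keep custom objects; custom settings are skipped by assumption.
            if (result.isCustom() && !result.isCustomSetting()) {
                options.add(new Map<String, String>{
                    'label' => result.getLabel(),
                    'value' => result.getName()
                });
            }
        }
        return options;
    }
}
```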

❌ Option B: Import the list of all custom objects from @salesforce/schema

This option is incorrect because importing objects using the @salesforce/schema directive in LWC is designed for statically referencing individual fields or objects, not dynamically listing them. In other words, @salesforce/schema requires that the object or field be explicitly named at design time, and it cannot be used to retrieve a complete or dynamic list of all custom objects.

Moreover, @salesforce/schema is a compile-time construct, which means it does not support the level of runtime introspection that is needed for a component meant to list all custom objects in a flexible and dynamic way. Using this approach would defeat the purpose of using Apex for dynamic metadata access, and it would require hardcoded imports for every potential custom object, which is both impractical and non-scalable in real-world Salesforce orgs.

❌ Option C: Check the getObjectType() value for 'Custom' or 'Standard' on the sObject describe result

This option is incorrect because the getObjectType() method in Salesforce Apex does not return a string indicating whether the object is "Custom" or "Standard." Instead, getObjectType() returns an instance of Schema.SObjectType, which refers to the type of the object but not its classification as custom or standard.

Therefore, there's no way to extract a direct textual label like "Custom" or "Standard" from getObjectType(). This makes the approach described in this option technically invalid for the purpose of distinguishing between custom and standard objects. The only correct and supported method to achieve this is isCustom(), which directly returns a Boolean indicating whether the object is custom.

❌ Option D: Use the getCustomObjects() method from the Schema class

This option is incorrect because the Schema class in Apex does not have a method called getCustomObjects(). While the Schema namespace provides powerful reflection capabilities, such as getGlobalDescribe() and various describe calls on individual objects and fields, there is no method specifically designed to return only custom objects in one call.

Developers must retrieve all sObjects using Schema.getGlobalDescribe(), and then programmatically filter them using describe().isCustom() for each entry. The method described in this option simply does not exist, which makes it technically incorrect and misleading in a Salesforce Apex context.

Reference:

Apex Reference Guide

Apex Describe Information

A company notices that their unit tests in a test class with many methods to create many records for prerequisite reference data are slow. What can a developer do to address the issue?

A. Turn off triggers, flows, and validations when running tests.

B. Move the prerequisite reference data setup to a TestDataFactory and call that from each test method.

C. Move the prerequisite reference data setup to a @testSetup method in the test class.

D. Move the prerequisite reference data setup to the constructor for the test class.

Explanation:

✅ Correct Answer: C. Move the prerequisite reference data setup to a @testSetup method in the test class 🧪

The @testSetup annotation allows developers to define a method that creates data once and shares it across all test methods within the class. This approach is specifically optimized for performance, as it avoids repeated DML operations for the same records, significantly reducing the overall test execution time.

When multiple test methods require identical reference data, such as sample accounts, contacts, or custom objects, @testSetup ensures consistency and efficiency. The records are inserted once, and any changes a test method makes to them are rolled back when that method finishes, so every method starts from the same data while redundant setup is eliminated. This results in faster, more maintainable, and scalable tests.

It also promotes better code organization by separating common setup logic from the actual test assertions. Overall, @testSetup is the best practice for shared data initialization in Apex test classes, especially when improving performance is a concern.
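A minimal sketch of a @testSetup method, using Account as stand-in reference data; the class name, record count, and naming pattern are hypothetical, and an org with extra required fields or validation rules on Account would need more setup. The second test method shows that changes one method makes to the setup records do not leak into the others.

```apex
// Hypothetical test class; Account stands in for the reference data.
@isTest
public class ReferenceDataTest {
    public static final Integer REFERENCE_ACCOUNT_COUNT = 200;

    // Runs once for the class; its records are visible to every test method.
    @testSetup
    static void createReferenceData() {
        List<Account> accounts = new List<Account>();
        for (Integer i = 0; i < REFERENCE_ACCOUNT_COUNT; i++) {
            accounts.add(new Account(Name = 'Ref Account ' + i));
        }
        insert accounts;
    }

    @isTest
    static void readsSharedReferenceData() {
        // The setup records are queried here, not re-inserted.
        Integer count = [SELECT COUNT() FROM Account WHERE Name LIKE 'Ref Account %'];
        System.assertEquals(REFERENCE_ACCOUNT_COUNT, count);
    }

    @isTest
    static void changesAreRolledBackPerMethod() {
        delete [SELECT Id FROM Account WHERE Name LIKE 'Ref Account %' LIMIT 10];
        Integer count = [SELECT COUNT() FROM Account WHERE Name LIKE 'Ref Account %'];
        // This deletion is rolled back when the method finishes, so other
        // test methods still see all the setup records.
        System.assertEquals(REFERENCE_ACCOUNT_COUNT - 10, count);
    }
}
```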

❌ Option A: Turn off triggers, flows, and validations when running tests 🚫

Disabling triggers, flows, or validations globally during test execution is not allowed in Salesforce. The platform is designed to simulate the real runtime environment, so attempting to bypass automation would undermine the integrity and accuracy of tests.

While you can conditionally bypass logic in some triggers using flags or static variables, this is typically used for isolating unit tests, not enhancing performance. Even then, it must be implemented very carefully to avoid missing important coverage or regression issues.

Additionally, Apex cannot switch off flows or validation rules. At most, each rule or flow can be built to check a bypass flag (such as a custom setting or custom permission), which still requires changing the automation itself. For this reason, this option is not a practical solution and is not aligned with Salesforce’s testing philosophy.

❌ Option B: Move the prerequisite reference data setup to a TestDataFactory and call that from each test method 🏭

Using a TestDataFactory class is a solid practice for improving modularity and code reuse in test data creation. It allows teams to centralize record creation logic and avoid code duplication across test classes.

However, calling the factory method from each individual test method means the data is recreated every time. This results in unnecessary DML operations and slower test execution, especially when tests are run in bulk or as part of continuous integration.

In contrast, @testSetup generates the data once and shares it internally, which is far more efficient. So while TestDataFactory improves structure, it does not solve the performance issue described in the question.

❌ Option D: Move the prerequisite reference data setup to the constructor for the test class 🏗️

Test classes in Apex are not designed to use constructors for setting up test data. Test methods are static, so the test framework never instantiates the test class, and a constructor would not run before them.

Apex testing is structured around the use of annotated methods like @isTest and @testSetup, which the platform handles with predictable execution and isolation guarantees. Relying on constructors for data creation breaks this pattern and leads to fragile, confusing test logic.

Moreover, Salesforce documentation and best practices do not recommend using constructors in test classes for anything beyond simple initialization. This option is unsupported and ineffective for ensuring consistent, efficient test setup.

Reference:

Apex Developer Guide

An org has a requirement that an Account must always have one and only one Contact listed as Primary, so selecting one Contact must de-select any others. The client wants a checkbox on the Contact called 'Is Primary' to control this feature. The client also wants to ensure that the last name of every Contact is stored entirely in uppercase characters. What is the optimal way to implement these requirements?

A. Write a Validation Rule on the Contact for the Is Primary logic and a before update trigger on Contact for the last name logic.

B. Write an after update trigger on Contact for the Is Primary logic and a separate before update trigger on Contact for the last name logic.

C. Write a single trigger on Contact for both after update and before update and call out to helper classes to handle each set of logic.

D. Write an after update trigger on Account for the Is Primary logic and a before update trigger on Contact for the last name logic.

Explanation:

✅ Correct Answer: C. Write a single trigger on Contact for both after update and before update and call out to helper classes to handle each set of logic 🧠

This is the optimal solution because it efficiently separates concerns while maintaining performance, scalability, and testability. Salesforce encourages developers to follow best practices by using a single trigger per object and delegating business logic to helper classes. This architecture ensures clean, modular code that is easier to maintain and extend.

The requirement to enforce only one Contact marked as "Is Primary" per Account needs to be handled after DML because it may require reviewing or updating other sibling contacts—records not necessarily in the trigger context. Therefore, it’s appropriate to handle this logic in the after update or after insert context, where all related records can be queried and modified accordingly.

On the other hand, the requirement to store the Contact’s last name in uppercase is best addressed in the before update or before insert context. This way, the data is modified before it hits the database, avoiding unnecessary updates or recursion. By combining both trigger events (before and after) in a single trigger, and delegating the logic to a helper class or service class, the solution remains clean and adheres to Salesforce's governor limits and coding standards.
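A sketch of option C, assuming a checkbox with the hypothetical API name Is_Primary__c and hypothetical names ContactTrigger and ContactTriggerHandler (in an org, the trigger and class live in separate files). The before events uppercase LastName in memory, and the after events clear Is_Primary__c on sibling Contacts once the new primary has an Id. The sketch also handles the insert events, since a new Contact can be created as primary; it enforces "at most one" primary and does not assign one when none is selected.

```apex
// File 1: one trigger per object, delegating all logic to a handler.
trigger ContactTrigger on Contact (before insert, before update, after insert, after update) {
    if (Trigger.isBefore) {
        ContactTriggerHandler.uppercaseLastNames(Trigger.new);
    } else if (Trigger.isAfter) {
        ContactTriggerHandler.enforceSinglePrimary(Trigger.new);
    }
}

// File 2: handler class holding both sets of logic.
public with sharing class ContactTriggerHandler {
    // Before context: field changes are saved without an extra DML statement.
    public static void uppercaseLastNames(List<Contact> contacts) {
        for (Contact c : contacts) {
            if (c.LastName != null) {
                c.LastName = c.LastName.toUpperCase();
            }
        }
    }

    // After context: the new primary has an Id, so siblings can be queried and updated.
    public static void enforceSinglePrimary(List<Contact> contacts) {
        Map<Id, Id> primaryByAccount = new Map<Id, Id>();
        for (Contact c : contacts) {
            if (c.Is_Primary__c == true && c.AccountId != null) {
                primaryByAccount.put(c.AccountId, c.Id);
            }
        }
        if (primaryByAccount.isEmpty()) {
            return;
        }
        List<Contact> toClear = new List<Contact>();
        for (Contact sibling : [
            SELECT Id FROM Contact
            WHERE AccountId IN :primaryByAccount.keySet()
            AND Is_Primary__c = true
            AND Id NOT IN :primaryByAccount.values()
        ]) {
            sibling.Is_Primary__c = false;
            toClear.add(sibling);
        }
        update toClear;
    }
}
```

The update inside the handler re-fires the trigger, but the cleared siblings are no longer primary, so the second pass finds nothing to change and the recursion stops there.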

❌ Option A: Write a Validation Rule on the Contact for the Is Primary logic and a before update trigger on Contact for the last name logic ⚠️

Using a Validation Rule to enforce the "only one primary contact per account" requirement is not viable. Validation rules cannot perform cross-record comparisons or updates. They can only evaluate conditions on the current record or related parent fields, not sibling records. Since this use case requires scanning all Contacts related to an Account to ensure only one is marked as primary, a validation rule cannot satisfy this constraint.

While the second part—converting the last name to uppercase—is fine in a before update trigger, relying on validation rules for complex data integrity enforcement that involves multiple records leads to limitations. This approach would leave the primary contact logic incomplete and prone to failure if multiple users attempt updates concurrently.

❌ Option B: Write an after update trigger on Contact for the Is Primary logic and a separate before update trigger on Contact for the last name logic 🧩

Although this design could meet both requirements, it violates the “one trigger per object” best practice. Having multiple triggers on the same object leads to maintenance problems, ordering conflicts, and increased risk of recursion or redundant logic. Here the two triggers fire on different events, so their relative order is defined, but Salesforce does not guarantee the execution order of multiple triggers for the same object and event, so every additional Contact trigger increases the risk of unpredictable behavior.

Moreover, splitting the logic into separate triggers for before update and after update increases complexity and decreases traceability. Even though this would work functionally, it is not optimal for long-term maintainability and testability. A unified trigger with clear delegation to helper classes is the more scalable solution.

❌ Option D: Write an after update trigger on Account for the Is Primary logic and a before update trigger on Contact for the last name logic ❌

This option mistakenly places the primary contact logic on the Account object. The Is Primary checkbox resides on the Contact record, and an Account trigger does not fire when a Contact's checkbox changes, so the logic that ensures only one is selected must execute in response to Contact changes. Using an Account trigger for this would also require querying and acting on child records from the parent side, adding unnecessary complexity and performance cost.

Additionally, although the before update trigger for capitalizing the last name is appropriate, splitting the logic between two object triggers and two different scopes complicates the codebase. This design is not aligned with the principle of handling logic on the object that owns the field being changed.

Reference:

Trigger Context Variables

Trigger and Bulk Request Best Practices

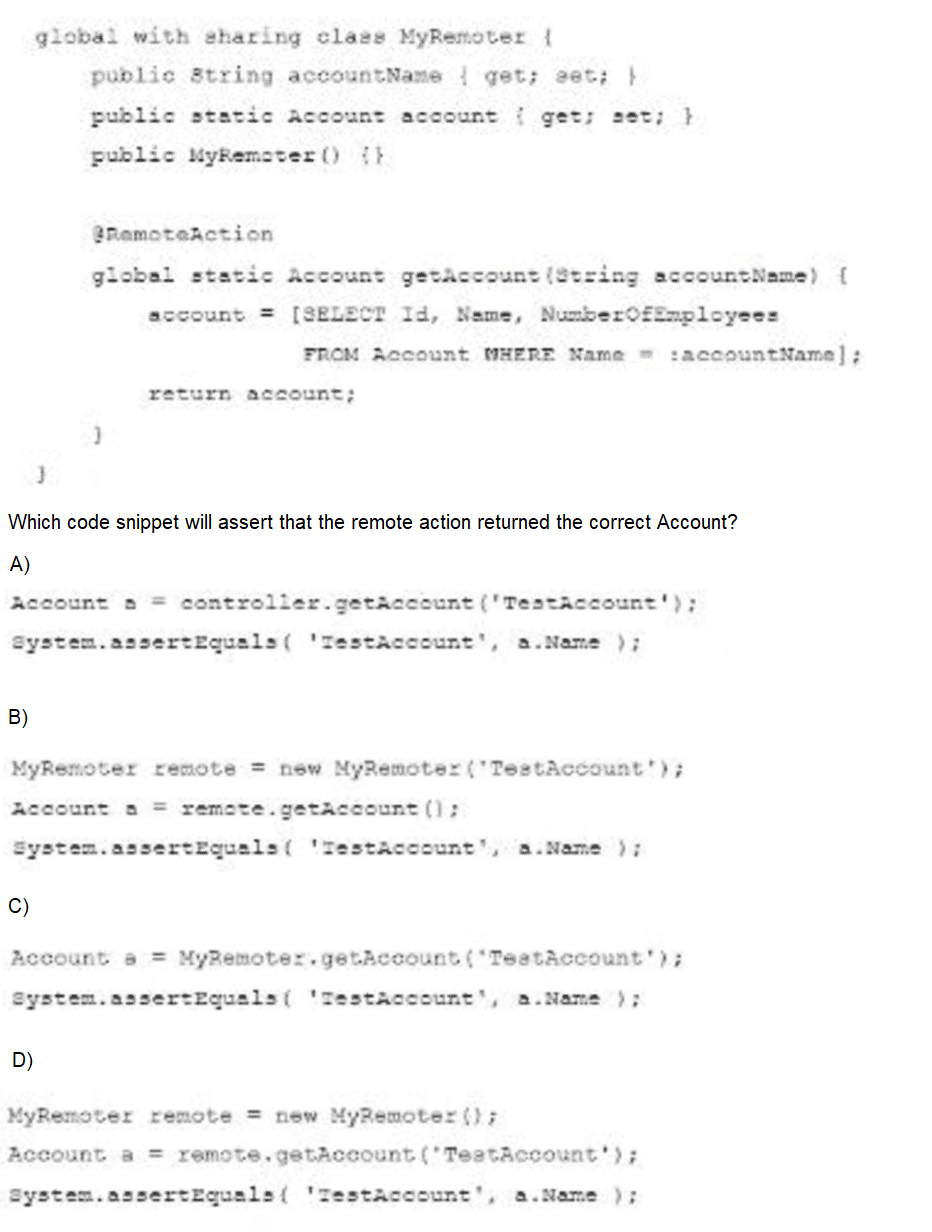

Consider the Apex class shown, which defines a RemoteAction used on a Visualforce search page.

A. Option A

B. Option B

C. Option C

D. Option D

Explanation:

In the provided code, the class MyRemoter defines a static method marked with the @RemoteAction annotation:

@RemoteAction

global static Account getAccount(String accountName)

⇨ This method takes an accountName string as a parameter, performs a SOQL query to fetch the Account with that name, and returns it.

⇨ Since getAccount() is a static method, the correct way to call it in Apex is directly via the class name — MyRemoter.getAccount('TestAccount') — as shown in Option C. There's no need to instantiate the class to access a static method, which makes this the cleanest and most appropriate syntax.

⇨ Once the Account object is returned, it is compared using System.assertEquals() to verify the name matches "TestAccount". This is exactly the right approach to assert that the correct Account record was returned by the remote action.
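Option C's calls can be placed in a complete test method, sketched below. MyRemoter comes from the question; the test class name is hypothetical, and getAccount() is assumed to query the Account's Name field. Because test methods do not see org data by default, the sketch inserts the Account it expects to find.

```apex
// Hypothetical test class exercising the static remote action.
@isTest
private class MyRemoterTest {
    @isTest
    static void getAccountReturnsMatchingAccount() {
        // Test data must be created inside the test.
        insert new Account(Name = 'TestAccount');

        Test.startTest();
        // Static method: called on the class, no instance needed.
        Account a = MyRemoter.getAccount('TestAccount');
        Test.stopTest();

        System.assertEquals('TestAccount', a.Name);
    }
}
```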

❌ Why the Other Options Are Incorrect:

A)

Account a = controller.getAccount('TestAccount');

System.assertEquals('TestAccount', a.Name);

🔴 Incorrect because controller is undefined in the context. The code assumes the existence of a controller variable or object, which has not been declared. Furthermore, the method getAccount() is a static method in MyRemoter, so it should be called using the class name — not via an instance or another object.

B)

MyRemoter remote = new MyRemoter('TestAccount');

Account a = remote.getAccount();

System.assertEquals('TestAccount', a.Name);

🔴 Incorrect because it tries to instantiate the MyRemoter class with a constructor that accepts a string ('TestAccount'), but no such constructor exists. In the class code shown, only a default constructor (public MyRemoter() {}) is defined. This would cause a compilation error. Also, getAccount() is static and requires an accountName argument, so remote.getAccount() is invalid both because it is called through an instance and because the argument is missing.

D)

MyRemoter remote = new MyRemoter();

Account a = remote.getAccount('TestAccount');

System.assertEquals('TestAccount', a.Name);

🔴 Incorrect because even though it passes the correct parameter ('TestAccount'), it calls the static method getAccount() through an instance (remote.getAccount(...)) instead of the class itself. Unlike Java, Apex does not allow a static method to be referenced through an object instance, so this line fails to compile. Static methods must be invoked using the class name.

Reference:

Apex Developer Guide

JavaScript Remoting for Apex Controllers

Universal Charities (UC) uses Salesforce to collect electronic donations in the form of credit card deductions from individuals and corporations.

When a customer service agent enters the credit card information, it must be sent to a third-party payment processor for the donation to be processed. UC uses one payment processor for individuals and a different one for corporations.

What should a developer use to store the payment processor settings for the different payment processors, so that their system administrator can modify the settings once they are deployed, if needed?

A. Custom object

B. Custom metadata

C. Hierarchy custom setting

D. List custom setting

Explanation:

🧩 Why Custom Metadata is the Best Choice

Custom metadata is the optimal solution in this scenario because it allows you to store configuration or integration settings (such as URLs, credentials, or routing logic for third-party processors) that are deployable, version-controlled, and modifiable by administrators through the Salesforce UI without requiring changes to code.

For Universal Charities (UC), the system needs to store and manage settings for two separate third-party payment processors — one for individuals and another for corporations. These settings could include endpoint URLs, authentication keys, or processing rules. By using custom metadata, these processor configurations become:

→ Environment-aware: You can create records for different environments (like UAT, staging, production) and reference them in code.

→ Modifiable by admins: Admins can adjust these records using the UI or via deployment without developer assistance.

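→ Deployable: Custom metadata types and their records are metadata, so they can be deployed via change sets, Salesforce DX, or the Ant Migration Tool, ensuring consistency across orgs. Custom setting records, by contrast, are data and must be recreated or loaded separately in each org.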

This approach promotes low-code configuration and avoids hardcoding sensitive logic or environment-specific details into Apex classes. It's also future-proof—if more payment processors are added, admins can simply create new metadata records without requiring additional code deployments.
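A sketch of how Apex might read the processor settings, assuming a hypothetical custom metadata type Payment_Processor__mdt with records whose DeveloperNames are Individual and Corporate. The getInstance() method reads a record by its DeveloperName; which fields the type holds (for example, an endpoint URL) is up to the design. Secrets such as API keys are generally better kept in Named Credentials than in metadata records.

```apex
// Payment_Processor__mdt and its 'Individual' and 'Corporate' records are hypothetical.
public with sharing class PaymentProcessorSettings {
    public class MissingSettingException extends Exception {}

    // Returns the processor configuration for the donor type.
    public static Payment_Processor__mdt forDonor(Boolean isCorporate) {
        String developerName = isCorporate ? 'Corporate' : 'Individual';
        // Looks up the custom metadata record by DeveloperName.
        Payment_Processor__mdt settings = Payment_Processor__mdt.getInstance(developerName);
        if (settings == null) {
            throw new MissingSettingException(
                'No payment processor configured for ' + developerName);
        }
        return settings;
    }
}
```

Calling code could then read something like PaymentProcessorSettings.forDonor(true).Endpoint__c, where Endpoint__c is an assumed field on the type. Admins change the endpoint by editing the record, with no code deployment.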

❌ Why the Other Options Are Incorrect

A. Custom Object

Custom objects are typically used for business data, not configuration. While it’s possible to use a custom object to store processor settings, it introduces overhead and lacks native support for packaging and deployment of data. You would also need to add security layers to prevent data visibility issues. Additionally, custom objects are not protected against end-user changes in the same way as metadata.

➡️ In short: Custom objects are too heavy and not intended for configuration metadata that needs to be easily deployable and editable.

C. Hierarchy Custom Setting

Hierarchy custom settings are useful when you need user- or profile-specific settings, such as individual user preferences. In this case, the processor configuration is not user-specific, but rather organization-wide and static, depending on whether the donation comes from an individual or corporation. Furthermore, custom setting records are data, so they cannot be deployed with change sets or as metadata through Salesforce DX, making them harder to manage across environments.

➡️ In short: Not suitable because you’re not managing user-specific behavior or access.

D. List Custom Setting

List custom settings are similar to a basic key-value store. They allow you to store settings accessible across the org, but like hierarchy settings, you cannot deploy their records with change sets or version them in source control. Admins must manually update them in each environment. This adds friction and makes the approach error-prone, especially for integrations.

➡️ In short: Limited deployment and versioning capabilities make it a weaker option than custom metadata.

Reference:

How Salesforce Support uses Login Access in customer support?

Universal Containers implements a private sharing model for the Convention_Attendee__c custom object. As part of a new quality assurance effort, the company created an Event_Reviewer__c user lookup field on the object. Management wants the event reviewer to automatically gain Read/Write access to every record they are assigned to. What is the best approach to ensure the assigned reviewer obtains Read/Write access to the record?

A. Create a before insert trigger on the Convention Attendee custom object, and use Apex Sharing Reasons and Apex Managed Sharing.

B. Create an after insert trigger on the Convention Attendee custom object, and use Apex Sharing Reasons and Apex Managed Sharing.

C. Create criteria-based sharing rules on the Convention Attendee custom object to share the records with the Event Reviewers.

D. Create a criteria-based sharing rule on the Convention Attendee custom object to share the records with a group of Event Reviewers.

Explanation:

✅ Correct Answer: B — After insert trigger with Apex Sharing Reasons and Managed Sharing

🔐 Why this works:

When implementing Apex Managed Sharing, Salesforce requires that the record already exist in the database so it has a valid record ID. This ID is necessary for creating a custom Share record that links the record to the user in the Event_Reviewer__c field. Because of this, the logic to share the record must happen after the insert — which is why the correct place is an after insert trigger.

🛠 Managed Sharing gives full control over access logic in Apex. By using a custom Apex Sharing Reason, you can apply named reasons for the share (e.g., “Assigned Reviewer Access”), and admins can later manage them through the UI if needed. This also keeps sharing logic dynamic and scalable, suitable for future changes.

📚 Use Case Fit:

This solution supports the requirement that access is automatically granted when a user is assigned as a reviewer. It works with private sharing models, ensures proper access is granted without compromising security, and is admin-friendly due to its maintainability.
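The ordering argument can be modeled outside Salesforce. This plain-Java sketch is an analogue, not Apex, and it simulates a save in three steps:

1. Before-insert logic runs while the records have no ids.
2. The "database" assigns ids.
3. After-insert logic creates one share row per assigned reviewer.

ShareRow mirrors the standard share fields ParentId, UserOrGroupId, AccessLevel ("Edit" means Read/Write), and RowCause. The sharing reason name Event_Reviewer_Access__c and all class names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of a Convention_Attendee__c record.
final class AttendeeRecord {
    String id;               // null until the "database" assigns one on insert
    final String reviewerId; // models the Event_Reviewer__c lookup

    AttendeeRecord(String reviewerId) {
        this.reviewerId = reviewerId;
    }
}

// Models a row in a share object such as Convention_Attendee__Share.
final class ShareRow {
    final String parentId;      // record being shared
    final String userOrGroupId; // user who receives access
    final String accessLevel;   // "Edit" = Read/Write
    final String rowCause;      // the Apex sharing reason

    ShareRow(String parentId, String userOrGroupId, String accessLevel, String rowCause) {
        this.parentId = parentId;
        this.userOrGroupId = userOrGroupId;
        this.accessLevel = accessLevel;
        this.rowCause = rowCause;
    }
}

// Simulates the save order: before-insert logic, id assignment, after-insert logic.
final class FakeDatabase {
    private int nextId = 1;
    final List<ShareRow> shares = new ArrayList<>();
    int recordsWithIdInBeforeInsert = 0;

    void insert(List<AttendeeRecord> records) {
        // "before insert": ids are still null, so no share row could reference these records.
        for (AttendeeRecord r : records) {
            if (r.id != null) {
                recordsWithIdInBeforeInsert++;
            }
        }
        for (AttendeeRecord r : records) {
            r.id = "a00" + nextId++;
        }
        afterInsert(records);
    }

    // "after insert": every record now has an id, so shares can point at it.
    private void afterInsert(List<AttendeeRecord> records) {
        for (AttendeeRecord r : records) {
            if (r.reviewerId != null) {
                shares.add(new ShareRow(r.id, r.reviewerId, "Edit", "Event_Reviewer_Access__c"));
            }
        }
    }
}
```

In real Apex, the after insert handler would build Convention_Attendee__Share records with those four fields and insert them. Reassigning a reviewer would also need an after update path, which this sketch leaves out.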

❌ A — Before insert trigger with Apex Managed Sharing

🚫 Why it's wrong:

In a before insert trigger, the record does not yet have a Salesforce ID, which is essential to create a valid Share object in Apex. Since Apex Managed Sharing requires the ParentId (record ID) to associate the shared record to the target user, this method fails technically. The trigger would attempt to share a record that doesn’t yet exist — leading to runtime errors or unintended behavior.

💡 Key Concept:

Sharing can only happen after the record has been saved and assigned an ID, which is why a before trigger cannot create the share.

❌ C — Criteria-based sharing rule for Event Reviewers

🚫 Why it's wrong:

Criteria-based sharing rules evaluate record field values, but they always share with a fixed target: a public group, a role, or a role and its subordinates. They cannot grant access to whichever user is stored in a lookup field like Event_Reviewer__c.

⚠️ Limitation:

They are designed for bulk sharing to groups of users, not one-to-one sharing based on lookup assignments. Therefore, they can’t fulfill the requirement to grant access dynamically to the user assigned.

❌ D — Share with a group of Event Reviewers

🚫 Why it's wrong:

This would share every Convention Attendee record with a static group of users, regardless of who is assigned as Event_Reviewer__c. This does not meet the requirement of only granting access to the specific assigned reviewer. Also, if reviewers change or rotate frequently, managing group membership would become cumbersome and error-prone.

📉 Problem:

It lacks flexibility, introduces overhead, and fails to dynamically reflect the lookup field changes.

Reference:

Apex Managed Sharing

Salesforce users consistently receive a "Maximum trigger depth exceeded" error when saving an Account. How can a developer fix this error?

A. Split the trigger logic into two separate triggers.

B. Convert the trigger to use the @future annotation, and chain any subsequent trigger invocations to the Account object.

C. Modify the trigger to use the @MultiThread=true annotation.

D. Use a helper class to set a Boolean to TRUE the first time a trigger is fired, and then modify the trigger to only fire when the Boolean is FALSE.

Explanation:

✅ Correct Answer: D — Use a helper class to set a Boolean to TRUE the first time a trigger is fired, and then modify the trigger to only fire when the Boolean is FALSE

🧠 Why this Works

The "Maximum trigger depth exceeded" error occurs when a trigger performs a DML operation (insert, update, or delete) that re-invokes the same trigger, which can create an infinite loop. Salesforce limits recursively fired triggers to a depth of 16, and exceeding that limit raises this error.

To prevent this, developers use a helper class (commonly called a trigger handler or recursion guard) to track whether the trigger has already run in the current transaction. A static Boolean flag such as hasAlreadyFired is set to TRUE after the first execution. Because static variables persist for the whole transaction, later invocations of the trigger check the flag and skip the logic. This breaks the recursion cycle and keeps execution under control.

🔁 Typical Flow Example:

➳ Trigger on Account → Updates related records → Triggers Account update again → infinite loop

➳ Using a static Boolean (TriggerControl.hasRun) breaks this loop by skipping execution on the second pass.
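This minimal plain-Java model shows both the loop and the guard. It is an analogue of Apex static variables, not Apex itself. AccountTriggerSim, its 16-level depth counter, and the reset() helper are hypothetical; only the TriggerControl.hasRun name comes from the text above. Without the guard, each simulated update re-fires the trigger until the depth limit throws. With the guard, the logic runs once.

```java
// Hypothetical recursion guard, named after TriggerControl.hasRun.
// A static field lives for the whole simulated transaction.
final class TriggerControl {
    static boolean hasRun = false;

    static void reset() {
        hasRun = false;
    }
}

// Simulates an Account trigger whose logic performs another Account update.
final class AccountTriggerSim {
    static final int MAX_DEPTH = 16; // models the platform's trigger depth limit
    static int depth = 0;            // current nesting of trigger invocations
    static int executions = 0;       // how many times the trigger logic ran

    // Models a DML update on Account: it fires the trigger one level deeper.
    static void updateAccount(boolean useGuard) {
        depth++;
        try {
            if (depth > MAX_DEPTH) {
                throw new IllegalStateException("Maximum trigger depth exceeded");
            }
            onAccountUpdate(useGuard);
        } finally {
            depth--;
        }
    }

    // The trigger body.
    static void onAccountUpdate(boolean useGuard) {
        if (useGuard) {
            if (TriggerControl.hasRun) {
                return; // second pass: skip the logic, which ends the loop
            }
            TriggerControl.hasRun = true;
        }
        executions++;
        updateAccount(useGuard); // this DML re-fires the trigger
    }
}
```

Because hasRun is static, it stays set for the rest of the transaction. One known caveat applies when a bulk save is processed in chunks of 200 records: a single flag can make later chunks skip the logic. A common refinement is to track already-processed record Ids in a static Set.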

🛠 Advantages:

➳ Prevents trigger recursion errors

➳ Keeps code efficient and predictable

➳ Easy to maintain and apply across multiple triggers

❌ Why the Other Options Are Incorrect

A. Split the trigger logic into two separate triggers

🔻 Why it's wrong:

Salesforce allows multiple triggers on the same object and event (e.g., two before insert triggers on Account), but they run in the same execution context and in no guaranteed order. Splitting the logic across two triggers does not stop the DML inside them from firing them again, so recursion continues unless state is tracked. This does not address the root cause: uncontrolled recursion.

B. Convert the trigger to use the @future annotation and chain any subsequent trigger invocations

🚫 Why it's wrong:

The @future annotation is used for asynchronous processing but cannot be used to chain synchronous trigger execution or prevent recursion. Also, @future methods cannot be called from another future method or batch job, making them unsuitable for chaining logic. This approach is more suited for callouts or non-critical background tasks, not recursion control.

C. Modify the trigger to use the @MultiThread=true annotation

⚠️ Why it's wrong:

This annotation does not exist in Apex. It seems to be a misstatement or confusion with multithreading in other platforms. Salesforce Apex operates in a single-threaded environment where operations are executed sequentially in a single execution context.

Reference:

Apex Developer Guide


Our new timed Salesforce-Platform-Developer-II practice test mirrors the exact format, number of questions, and time limit of the official exam.

The #1 challenge isn't just knowing the material; it's managing the clock. Our new simulation builds your speed and stamina.

You've studied the concepts. You've learned the material. But are you truly prepared for the pressure of the real Salesforce Certified Platform Developer II - Plat-Dev-301 exam?

We've launched a brand-new, timed Salesforce-Platform-Developer-II practice exam that perfectly mirrors the official exam:

✅ Same Number of Questions

✅ Same Time Limit

✅ Same Exam Feel

✅ Unique Exam Every Time

This isn't just another Salesforce-Platform-Developer-II practice questions bank. It's your ultimate preparation engine.

Enroll now and gain the unbeatable advantage of:

✅ Covers advanced Apex, integration patterns, asynchronous processes, and design patterns

✅ Realistic, exam-level questions based on the latest Salesforce updates

✅ Detailed explanations for every answer — know not just what’s right, but why

✅ Helps you master real-world problem-solving, not just memorize facts

✅ Unlimited test resets + performance tracking